Control Award

Tags: control
Personhours: 3

Task:

Last Saturday, after our qualifier, we held a team meeting and made a list of what we needed to do before our second qualifier this Saturday. One of the tasks was to prepare our Control Award submission, which we unfortunately could not finish in time for our last competition.

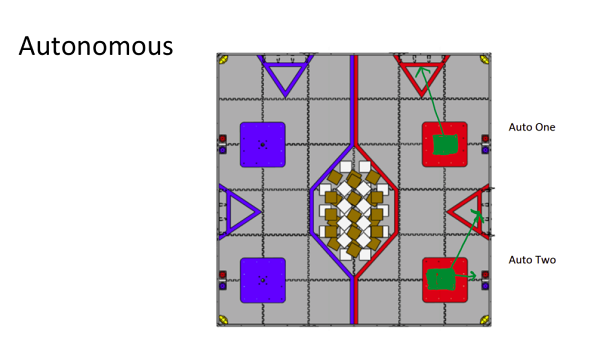

Autonomous Objectives:

- Autonomous A: Knock off the opponent's jewel, place glyphs in the correct location based on the image, and park in the safe zone (85 pts)

- Autonomous B: Park in the safe zone and place a glyph in the cryptobox (25 pts)

Autonomous B can be delayed by a set amount of time, which allows better coordination with our alliance partners. If our partner team's autonomous is more reliable, we can give them room to move first and still add points to our alliance score.
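The delayed start above can be sketched as a small non-blocking state machine. This is a minimal illustration in plain Java, not our actual OpMode: the class name `DelayedStart`, its `Stage` values and the idea of passing in elapsed seconds are hypothetical. The key point is that the robot checks the clock on every loop instead of sleeping, so other code keeps running while it waits.

```java
/** Hypothetical sketch: delayed-start logic for Autonomous B, checked once per loop. */
class DelayedStart {
    enum Stage { WAITING, RUNNING }

    private final double delaySeconds; // hypothetical: delay chosen with our partner before the match
    private Stage stage = Stage.WAITING;

    DelayedStart(double delaySeconds) {
        if (delaySeconds < 0) {
            throw new IllegalArgumentException("delay must be >= 0");
        }
        this.delaySeconds = delaySeconds;
    }

    /** Never sleeps: it only compares the elapsed time to the delay and returns the current stage. */
    Stage update(double secondsSinceStart) {
        if (stage == Stage.WAITING && secondsSinceStart >= delaySeconds) {
            stage = Stage.RUNNING; // once started, stay started
        }
        return stage;
    }
}
```

On the robot, `update()` would be called every loop with the elapsed time since the start of autonomous (for example, from an `ElapsedTime` timer), and the parking and glyph routine would only begin once it returns `RUNNING`.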

Sensors Used:

- Phone Camera - Lets the robot determine where to place glyphs. Vuforia detects the pattern on the wall and reports both which pattern it is and where it sits relative to the camera, and Open Source Computer Vision (OpenCV) analyzes the pattern in the image.

- Color Sensor - The robot identifies the correct jewel using the sensor in passive mode. This feedback tells us whether the robot needs to move forward or backward so that it knocks off the opposing team's jewel.

- Inertial Measurement Unit (IMU) - Three-axis gyroscopes and accelerometers report the robot's heading. We use it for station keeping and straight-line driving in autonomous, and for turning to specific headings for navigation, cryptobox placement, and balancing.

- Motor Encoders - Encoder counts tell us how many rotations the wheels have made, which we convert into meters traveled. Combined with the IMU heading, this lets us calculate our position on the field relative to where we started.
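The encoder-to-meters conversion and the position tracking described above can be sketched as follows. This is a simplified dead-reckoning sketch under stated assumptions, not our exact code. The constants `TICKS_PER_REV` and `WHEEL_DIAMETER_M` are hypothetical placeholders, the heading is assumed to come from the IMU in degrees, and the robot is assumed to drive along its heading without strafing. It also uses only encoders plus heading and leaves out the accelerometer. Distance per tick is the wheel circumference divided by ticks per revolution, and trigonometry splits each step into x and y components.

```java
/** Hypothetical sketch: encoder ticks to meters, plus dead reckoning using an IMU heading. */
class Odometry {
    // Hypothetical drivetrain constants; replace with the real motor and wheel values.
    static final double TICKS_PER_REV = 1120.0;
    static final double WHEEL_DIAMETER_M = 0.1016; // a 4 in wheel

    private double x = 0.0, y = 0.0; // meters, relative to the starting position
    private int lastTicks = 0;

    /** One wheel revolution covers pi * diameter meters. */
    static double ticksToMeters(int ticks) {
        return ticks / TICKS_PER_REV * Math.PI * WHEEL_DIAMETER_M;
    }

    /** Distance comes from the encoders, direction from the heading (degrees, CCW from +x). */
    void update(int currentTicks, double headingDeg) {
        double d = ticksToMeters(currentTicks - lastTicks);
        lastTicks = currentTicks;
        double h = Math.toRadians(headingDeg);
        x += d * Math.cos(h);
        y += d * Math.sin(h);
    }

    double getX() { return x; }
    double getY() { return y; }
}
```

For example, with these placeholder constants one full wheel revolution (1120 ticks) moves the estimate about 0.32 m along the current heading.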

Key Algorithms:

- Integrate motor odometry with the IMU's gyroscope and accelerometer readings, using trigonometry, so the robot knows its location at all times.

- Use a Proportional/Integral/Derivative (PID) controller with IMU readings to maintain heading. The robot corrects any difference between its actual and desired heading at a power level based on the size of the error, the error built up over time, and how quickly the error is changing. This allows us to navigate the field accurately during autonomous.

- Using the robot controller phone's camera, Vuforia tracks the patterns on the wall so the robot can keep a set distance from them. This combines two machine vision libraries (Vuforia and OpenCV), trigonometry, and PID motion control.

- All of our code is non-blocking so that multiple operations can happen at the same time. We use state machines extensively to prevent priority conflicts between low-level behaviors.
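The PID heading hold described above can be sketched as a controller that is called once per loop, in keeping with the non-blocking approach. This is a generic PID written in plain Java, not our tuned controller. The class name and gains are hypothetical, and `actualDeg` is assumed to be the IMU heading in degrees. The error is wrapped into (-180, 180] so the robot always turns the short way around.

```java
/** Hypothetical sketch of a PID heading controller; gains must be tuned on the real robot. */
class HeadingPid {
    private final double kP, kI, kD;
    private double integral = 0.0;
    private double lastError = 0.0;
    private boolean first = true;

    HeadingPid(double kP, double kI, double kD) {
        this.kP = kP;
        this.kI = kI;
        this.kD = kD;
    }

    /** Wrap an angle difference into (-180, 180] degrees. */
    static double wrapDegrees(double deg) {
        double a = deg % 360.0;
        if (a > 180.0) a -= 360.0;
        if (a <= -180.0) a += 360.0;
        return a;
    }

    /** Returns a turn correction in [-1, 1]; call once per loop, dtSeconds must be > 0. */
    double update(double targetDeg, double actualDeg, double dtSeconds) {
        if (dtSeconds <= 0) {
            throw new IllegalArgumentException("dtSeconds must be > 0");
        }
        double error = wrapDegrees(targetDeg - actualDeg);
        integral += error * dtSeconds;                       // error built up over time
        double derivative = first ? 0.0 : (error - lastError) / dtSeconds;
        first = false;
        lastError = error;
        double out = kP * error + kI * integral + kD * derivative;
        return Math.max(-1.0, Math.min(1.0, out));           // clamp to motor power range
    }
}
```

The correction would be added to one side of the drivetrain and subtracted from the other. A production controller would usually also limit the integral term so it cannot wind up during long turns.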

Driver-Controlled Enhancements:

- If the lift has been raised, jewel arm movement is blocked to avoid a collision.

- The robot has a slow mode that lets our drivers maneuver precisely and pick up glyphs easily.

- The robot also has a turbo mode, activated by pressing the bumper, which lets the driver move quickly around the field.
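The driver enhancements above reduce to a few small checks that run every TeleOp loop. The following is a hedged sketch in plain Java. All of the scale factors and the `LIFT_RAISED_TICKS` threshold are hypothetical. It also assumes, as a design choice not stated above, that turbo takes priority if both slow mode and the bumper are active. The lift interlock is a yes/no check, so the jewel arm simply keeps its last command while the lift is up.

```java
/** Hypothetical sketch of TeleOp driver-assist checks; all constants are placeholders. */
class DriverAssist {
    static final double NORMAL_SCALE = 0.6;    // hypothetical default drive speed
    static final double SLOW_SCALE = 0.3;      // hypothetical slow mode for glyph pickup
    static final double TURBO_SCALE = 1.0;     // full power while the bumper is held
    static final int LIFT_RAISED_TICKS = 500;  // hypothetical lift encoder count meaning "raised"

    /** Scale a joystick drive command; assumption: turbo wins if both modes are active. */
    static double scaleDrive(double stick, boolean slowMode, boolean turboBumper) {
        double scale = turboBumper ? TURBO_SCALE : (slowMode ? SLOW_SCALE : NORMAL_SCALE);
        return Math.max(-1.0, Math.min(1.0, stick * scale));
    }

    /** Interlock: the jewel arm may only move while the lift is below the raised threshold. */
    static boolean jewelArmAllowed(int liftTicks) {
        return liftTicks < LIFT_RAISED_TICKS;
    }
}
```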

Date: November 15, 2017