Beginning Auto Stone

Tags: control

Personhours: 12

Task: Design an intake for the stones based on wheels

Initial Design: Rolling Intake

We've been trying to get our start on autonomous today. We are still using FrankenDroid (our R12D mecanum drive test bot) because our competition bot is taking longer than we wanted. We just started coding, so we are mostly trying to learn how to use the statemachine class that Arjun wrote last year. We wanted to make a skeleton of a navigation routine that would pick up and deposit two skystones, but we ran into three notable issues.

Problem #1 - tuning Crane presets

We needed to create some presets for repeatable positions for our crane subsystem. Since we constantly output the crane's encoder positions to telemetry, it was easy to position the crane manually and read off the values. We were mostly focusing on the elbow joint's position, since the extension won't come into play until we are stacking taller towers. The positions we need for auto are:

- BridgeTransit - the angle the arm needs to be to fit under the low skybridge

- StoneClear - the angle that positions the gripper to safely just pass over an upright stone

- StoneGrab - the angle that places the intake roller on the skystone to begin the grab
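One way to hold presets like these in code is a small table keyed by name, filled in with whatever encoder reading telemetry shows while the arm is held in place. This is only a sketch: `ElbowPreset` and `ElbowPresets` are hypothetical names, and no real tick values are implied.

```java
import java.util.EnumMap;
import java.util.Map;

/** The three elbow positions needed for auto (names follow the list above). */
enum ElbowPreset { BRIDGE_TRANSIT, STONE_CLEAR, STONE_GRAB }

/** Hypothetical holder for tuned elbow targets, in encoder ticks. */
final class ElbowPresets {
    private final Map<ElbowPreset, Integer> ticks = new EnumMap<>(ElbowPreset.class);

    /** Store the encoder reading seen on telemetry while the arm is held at the preset. */
    void record(ElbowPreset preset, int encoderTicks) {
        ticks.put(preset, encoderTicks);
    }

    /** Look up a recorded target; fails loudly if the preset was never tuned. */
    int target(ElbowPreset preset) {
        Integer value = ticks.get(preset);
        if (value == null) {
            throw new IllegalStateException(preset + " has not been recorded");
        }
        return value;
    }
}
```

Failing on an unrecorded preset is a deliberate choice here: a missing value stops the run instead of silently sending the arm to zero.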

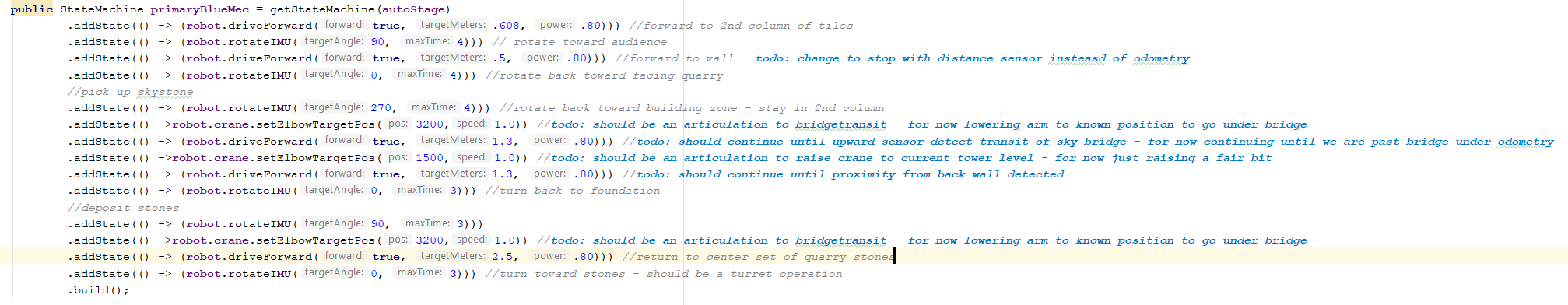

I've never used lambda expressions before, so that was a bit of a learning curve. But once I got the syntax down, it was pretty easy to get the series of needed moves into the statemachine builder. The sequence comes from our auto planning post. The code has embedded comments to explain what we are trying to do:
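For anyone else who hasn't seen the pattern, here is a minimal sketch of how a lambda-driven builder can work. It is a hypothetical stand-in, not the statemachine class Arjun wrote: `SimpleStateMachine`, `addState`, and `execute` are names made up for illustration. Each state is a lambda that returns true when it is finished, and the machine advances one state at a time.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

/** Hypothetical sequential state machine; NOT the team's actual class. */
final class SimpleStateMachine {
    private final List<BooleanSupplier> states;
    private int index = 0;

    private SimpleStateMachine(List<BooleanSupplier> states) {
        this.states = states;
    }

    static Builder builder() {
        return new Builder();
    }

    /** Call once per loop: runs the current state, advances when it reports done.
     *  Returns true once every state has finished. */
    boolean execute() {
        if (index < states.size() && states.get(index).getAsBoolean()) {
            index++;
        }
        return index >= states.size();
    }

    static final class Builder {
        private final List<BooleanSupplier> states = new ArrayList<>();

        /** Each state is a lambda that returns true when it is complete. */
        Builder addState(BooleanSupplier state) {
            states.add(state);
            return this;
        }

        SimpleStateMachine build() {
            return new SimpleStateMachine(new ArrayList<>(states));
        }
    }

    /** Usage sketch: the first state needs three loop passes, the second is one-shot. */
    static int runDemo() {
        int[] passes = {0};
        SimpleStateMachine auto = builder()
                .addState(() -> ++passes[0] >= 3) // stands in for "turn until on heading"
                .addState(() -> true)             // stands in for a one-shot action
                .build();
        int calls = 1;
        while (!auto.execute()) {
            calls++;
        }
        return calls;
    }
}
```

The appeal of the lambda form is that each step of the routine reads as one line in the builder chain, in the order the robot performs it.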

Problem #3 - REV IMU flipped?

This was the hard one. We lost the last 1.5 hours of practice trying to figure this out, so we didn't get around to actually picking up any stones. We figured that our framework code couldn't have this wrong because it's based on last year's code and the team has been using imu-based navigation since before Karina started. So we thought it must be right and we just didn't know how to use it.

The problem started with our turn navigation. We have a method called rotateIMU for in-place turns, which just takes a target angle and a time limit. But the robot was turning the wrong way. We'd put in a 90 degree target value expecting it to rotate clockwise (looking down at it), but it would turn counterclockwise and then oscillate wildly, which at least looked like it had found a target value. It looked like very badly tuned PID overshoot, and since we hadn't done PID tuning for this robot yet, we decided to start with that. That was a mistake. We ended up damping the P constant down until it had only the tiniest effect, and the oscillation never went away.

We have another method built into our demo code called maintainHeading. It does just what it sounds like: we can put the robot on a rotating lazy susan and it will use the imu to maintain its current heading by rotating counter to the turntable. When we played with this, it became clear the robot was anti-correcting. So we looked more closely at our imu outputs and yes, they were "backwards." Turning to the left increased the imu yaw when we expected turning to the right to do that.

We have offset routines that help us with relative turns, so we suspected the problem was in there. However, we traced it all the way back to the raw outputs from imuAngles and found nothing wrong in our code. The REV Control Hub is acting like the imu module is installed upside down. We also have an Expansion Hub on the robot and that behaves the same way. This actually triggered a big debate about navigation standards between our mentors, and they might write about that separately. In the end, we went with the interpretation that the imu is flipped. That left two possible corrections: either change our relative turns to stop assuming that clockwise means increasing, or flip the imu output. We decided to flip the imu output, and the fix was as simple as inserting the "360-" into this line of code:

poseHeading = wrapAngle(360-imuAngles.firstAngle, offsetHeading);
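To see what the "360-" does, here is a small standalone sketch. The body of `wrapAngle` is an assumption (our guess is that it adds the two angles and wraps the sum into the range 0 to 360), and `HeadingMath` is a hypothetical class name; only the call shape comes from the line above.

```java
/** Standalone sketch of the heading flip; wrapAngle's behavior is ASSUMED. */
final class HeadingMath {
    /** Assumed behavior: add two angles and wrap the sum into [0, 360). */
    static double wrapAngle(double angle1, double angle2) {
        double sum = (angle1 + angle2) % 360.0;
        return sum < 0 ? sum + 360.0 : sum;
    }

    /** Reverse the sense of the raw yaw, then apply the offset, as in the "360-" fix. */
    static double poseHeading(double rawYawDegrees, double offsetHeading) {
        return wrapAngle(360.0 - rawYawDegrees, offsetHeading);
    }
}
```

Under that assumption, a raw yaw of 90 becomes a pose heading of 270 and a raw yaw of -90 becomes 90, so a turn that used to increase the heading now decreases it, while a raw yaw of 0 still maps to 0.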

So the oscillation turned out to be at 180 degrees to the target angle. That's because the robot was anti-correcting but still able to know it wasn't near the target angle. At 180 it flips which direction it thinks it should turn for the shortest path to zero error, but the error was at its maximum, so the oscillation was violent. Once we got the heading flipped correctly, everything started working and the PID control is much better with the original constants. Now we could start making progress again.
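The same behavior shows up in a toy simulation. This is a sketch under simplifying assumptions, not our rotateIMU code: a P-only controller, a robot that turns by exactly the commanded amount each step, and made-up names (`FlippedHeadingDemo`, `errorAfter`). When the measured heading moves opposite to the command, the error grows every step until it wraps around at 180 degrees, and then it bounces back and forth there.

```java
/** Toy model of a P-only turn controller with a correct or flipped heading sensor. */
final class FlippedHeadingDemo {
    /** Shortest signed difference (target - heading), in the range (-180, 180]. */
    static double shortestError(double target, double heading) {
        double e = (target - heading) % 360.0;
        if (e > 180.0) e -= 360.0;
        if (e <= -180.0) e += 360.0;
        return e;
    }

    /**
     * Run the controller for a number of steps and return the final error.
     * With sensorFlipped set, each correction moves the measured heading
     * the opposite way from what the controller intended.
     */
    static double errorAfter(double target, double start, double kp,
                             int steps, boolean sensorFlipped) {
        double heading = start;
        for (int i = 0; i < steps; i++) {
            double turn = kp * shortestError(target, heading);
            heading += sensorFlipped ? -turn : turn;
        }
        return shortestError(target, heading);
    }
}
```

With a 90 degree target and kp of 0.2, the unflipped case shrinks the error by 20% per step, while the flipped case grows it by 20% per step until it crosses 180 and changes sign. Lowering kp only slows the growth, which fits what we saw when damping P did not remove the oscillation.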

The irony here is that we might end up mounting one of our REV hubs upside down on our competition robot, in which case we'll have to flip that imu back.

Next Steps

1) Articulating the Crane - We want to turn our Crane presets into proper articulations. Last year we built a complicated articulation engine that controlled that robot's many degrees of freedom. We have much simpler designs this year and don't really need a complicated articulation engine. But it has some nice benefits, like set-and-forget target positions, so the robot can be doing multiple things simultaneously from inside a step-by-step state machine. Plus, since the robot is so much simpler this year and we have examples, the engine should be easier to code.

2) Optimization - Our first pass at auto takes 28 seconds, and that's with only 1.5 skystone runs and not even picking the first skystone up or placing it. There is also no foundation move, no end run to the bridge, and no vision. We have a long way to go. But we are doing everything serially right now, and we can recover some time when we get our crane operating in parallel with navigation. We're also running at 0.8 speed, so we can gain a bit there.

3) Vision - We've played with both the TensorFlow and Vuforia samples and they work fairly well. Since we get great positioning feedback from Vuforia, we'll probably start with that for auto skystone retrieval.

4) Behaviors - We want to make picking up stones an automatic low-level behavior that works in auto and teleop. The robot should be able to automatically detect when it is over a stone and try to pick it up. We may want more than just vision to help with this, possibly distance sensors as well.

5) Wall detection - It probably makes sense to use distance sensors to detect distance to the wall and to stones. Our dead reckoning will need to be corrected as we get it up to maximum speed.