Positioning Algorithms

Tags: control, software, and journal

Personhours: 10

Task: Determine the robot’s position on the field with little to no error

With the rigging and the backdrops being introduced as huge obstacles to pathing this year, it’s absolutely crucial that the robot knows exactly where it is on the field. Because we have a Mecanum drivetrain, the wheels slip too much for the included motor encoders to support the fine-tuned pathing that we’d like to do. This year, we use as many sensors (sources of truth) as possible to localize and relocalize the robot.

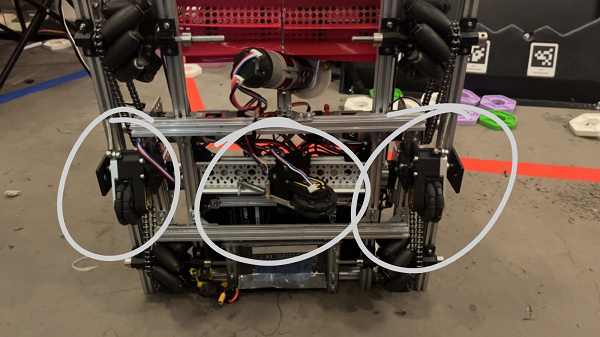

Odometry Pods (Deadwheels)

There are three non-driven (“dead”) omni wheels on the bottom of our robot that serve as our first source of truth for position.

The parallel wheels serve as our sensors for front-back motion, and the difference in their motion is used to calculate our heading. The perpendicular wheel at the center of our robot tells us how far and in which direction we’ve strafed. Because the pods are read just like DC motor encoders, their values come in with the bulk updates roughly every loop, making them a good option for quick readings.
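The pod arithmetic above can be sketched roughly as follows. This is a minimal illustration with hypothetical names, not a definitive implementation: the class `DeadWheelOdometry`, the `trackWidth` and `perpOffset` parameters, the inch units, and the sign conventions (counterclockwise and leftward positive) are all assumptions made for the example.

```java
/** Minimal three-dead-wheel odometry sketch. All names, units, and signs are assumptions. */
final class DeadWheelOdometry {
    private final double trackWidth;  // distance between the two parallel wheels, in inches
    private final double perpOffset;  // forward offset of the perpendicular wheel from the turn center, in inches

    private double x, y, heading;     // field pose: inches, inches, radians (counterclockwise positive)

    DeadWheelOdometry(double trackWidth, double perpOffset) {
        this.trackWidth = trackWidth;
        this.perpOffset = perpOffset;
    }

    /** Integrates one loop's wheel travel, already converted from encoder ticks to inches. */
    void update(double dLeft, double dRight, double dPerp) {
        // The difference between the parallel wheels gives the change in heading.
        double dHeading = (dRight - dLeft) / trackWidth;
        // Their average gives the front-back travel.
        double dForward = (dLeft + dRight) / 2.0;
        // Remove the arc the perpendicular wheel traces while the robot turns.
        double dStrafe = dPerp - perpOffset * dHeading;

        // Rotate the robot-relative motion into the field frame using the mid-loop heading.
        double mid = heading + dHeading / 2.0;
        x += dForward * Math.cos(mid) - dStrafe * Math.sin(mid);
        y += dForward * Math.sin(mid) + dStrafe * Math.cos(mid);
        heading += dHeading;
    }

    double getX() { return x; }
    double getY() { return y; }
    double getHeading() { return heading; }
}
```

With the perpendicular wheel at the center of the robot, `perpOffset` would be zero and the strafe reading is used directly.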

IMU

The IMU on the Control Hub isn’t subject to field conditions, wheel alignment, or minor elevation changes, so we use it to update our heading every two seconds. This helps avoid any serious error buildup in our heading if the dead wheels aren’t positioned properly. The downside to the IMU is that it’s an I2C sensor, and polling I2C sensors adds a full 7 ms to every loop. Periodic updates let us balance the value of the incoming heading data against the loop time and keep our robot running smoothly.
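One way to sketch the periodic update is a small gate that only pays for the slow read when the interval has elapsed. The class name `PeriodicHeadingCorrector` is hypothetical, and the `DoubleSupplier` is a stand-in for the real IMU read so the example stays self-contained; nothing here is an FTC SDK call.

```java
import java.util.function.DoubleSupplier;

/** Replaces the odometry heading with an IMU reading at a fixed interval. Names are hypothetical. */
final class PeriodicHeadingCorrector {
    private final DoubleSupplier imuHeading;  // stand-in for the slow I2C heading read, in radians
    private final double intervalSeconds;     // e.g. 2.0 for an update every two seconds
    private double lastReadSeconds = Double.NEGATIVE_INFINITY; // forces a read on the first call

    PeriodicHeadingCorrector(DoubleSupplier imuHeading, double intervalSeconds) {
        this.imuHeading = imuHeading;
        this.intervalSeconds = intervalSeconds;
    }

    /** Returns the IMU heading when a read is due; otherwise returns the odometry heading untouched. */
    double correct(double odometryHeading, double nowSeconds) {
        if (nowSeconds - lastReadSeconds < intervalSeconds) {
            return odometryHeading; // skip the I2C poll and keep the loop fast
        }
        lastReadSeconds = nowSeconds;
        return imuHeading.getAsDouble();
    }
}
```

Calling `correct` once per loop with the current odometry heading and a running clock means most loops never touch the I2C bus.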

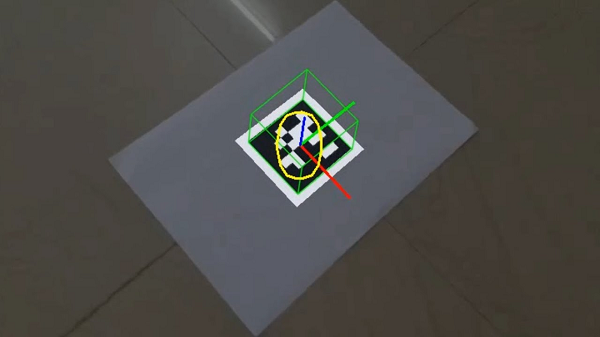

AprilTags

AprilTags are a new addition to the field this year, but we’ve been using them for years and have built up our own in-house detection and position estimation algorithms. AprilTags are unique in that they convey a coordinate grid in three-dimensional space that the camera can use to calculate its position relative to the tag.
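As a rough illustration of how a tag-relative position becomes a field position, the sketch below applies a plain 2D rigid transform. It is not our detection or estimation code: the class `TagLocalizer`, the flat 2D simplification, and the convention that the tag's x axis points out of its face are assumptions made for the example.

```java
/** Converts a camera position measured in a tag's frame into field coordinates. Names are hypothetical. */
final class TagLocalizer {
    /**
     * @param tagX       tag position on the field, in inches
     * @param tagY       tag position on the field, in inches
     * @param tagYaw     direction the tag faces on the field, in radians (assumed convention)
     * @param camXInTag  camera distance straight out from the tag face, in inches
     * @param camYInTag  camera offset to the tag's left, in inches
     * @return {fieldX, fieldY} of the camera
     */
    static double[] cameraFieldPosition(double tagX, double tagY, double tagYaw,
                                        double camXInTag, double camYInTag) {
        double cos = Math.cos(tagYaw);
        double sin = Math.sin(tagYaw);
        // Rotate the tag-frame offset into the field frame, then translate by the tag's position.
        double fieldX = tagX + camXInTag * cos - camYInTag * sin;
        double fieldY = tagY + camXInTag * sin + camYInTag * cos;
        return new double[] { fieldX, fieldY };
    }
}
```

For example, a tag at (60, 36) facing the negative x direction with the camera 20 inches straight in front of it places the camera at roughly (40, 36).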

Distance Sensors

We also use two REV distance sensors mounted to the back of our chassis. They give us our distance to the backdrop, and the difference between their readings gives us a pretty good sense of our heading relative to it. The challenge is that, like the IMU, they’re taxing on the Control Hub and hurt our loop times significantly, so we dynamically enable them only when we’re close enough to the backdrop to get a good reading.
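The heading estimate and the enable check can be sketched with a little trigonometry. The class `BackdropHeading`, the `sensorSpacing` and `maxUsefulRange` parameters, and the sign convention (positive when the left sensor reads farther) are assumptions for illustration, not measured values from our robot.

```java
/** Heading-from-two-distances sketch. Names, units, and sign convention are assumptions. */
final class BackdropHeading {
    /**
     * Angle between the back of the chassis and the backdrop, in radians.
     * Zero when both sensors read the same distance, positive when the left sensor reads farther.
     */
    static double headingError(double leftDistance, double rightDistance, double sensorSpacing) {
        // The two readings and the spacing between the sensors form a right triangle.
        return Math.atan2(leftDistance - rightDistance, sensorSpacing);
    }

    /** Only poll the sensors when the estimated distance to the backdrop is within useful range. */
    static boolean shouldPoll(double estimatedDistance, double maxUsefulRange) {
        return estimatedDistance <= maxUsefulRange;
    }
}
```

The estimated distance passed to `shouldPoll` would come from the cheaper sources (the dead wheels), so the distance sensors are only read near the backdrop.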