Vision Discussion

Task: Consider potential vision approaches for sampling

Part of this year’s game requires us to be able to detect the location of minerals on the field. The main use for this is in sampling. During autonomous, we need to move only the gold mineral, without touching the silver minerals in order to earn points for sampling. There are a few ways we could be able to detect the location of the gold mineral.

First, we could possibly use OpenCV to run transformations on the image that the camera sees. We would have to design an OpenCV pipeline which identifies yellow blobs, filters out those that aren’t minerals, and finds the centers of the blobs which are minerals. This is most likely the approach that many teams will use. The benefit of this approach is that it will be easy enough to write. However, it may not work in different lighting conditions that were not tested during the designing of the OpenCV pipeline.

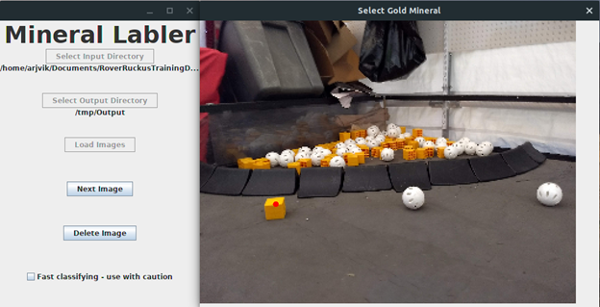

Another approach is to use Convolutional Neural Networks (CNNs) to identify the location of the gold mineral. Convolutional Neural Networks are a class of machine learning algorithms that “learn” to find patterns in images by looking at large amounts of samples. In order to develop a CNN to identify minerals, we must take lots of photos of the sampling arrangement in different arrangements (and lighting conditions), and then manually label them. Then, the algorithm will “learn” how to differentiate gold minerals from other objects on the field. A CNN should be able to work in many different lighting conditions, however, it is also more difficult to write.

Next Steps

As of now, Iron Reign is going to attempt both methods of classification and compare their performance.