League Meet #3 Review

By Aarav, Anuhya, Gabriel, Leo, Vance, Trey, and Georgia

Task: Review our performance at the 3rd League Meet and discuss possible next steps

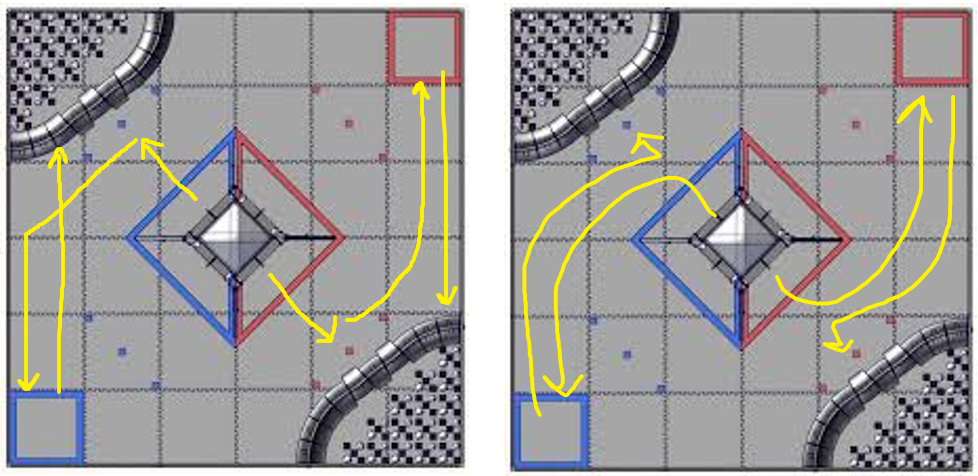

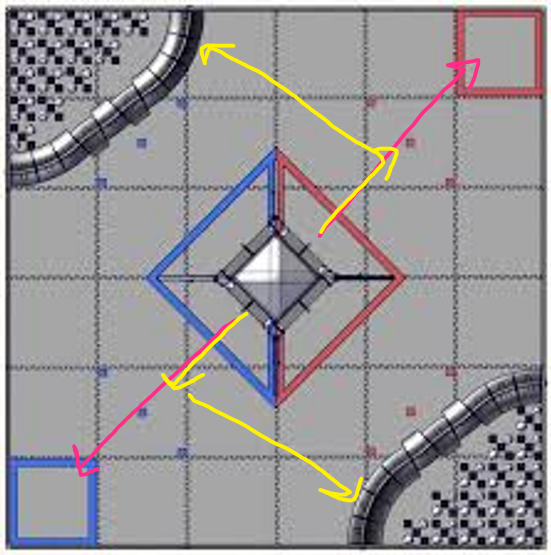

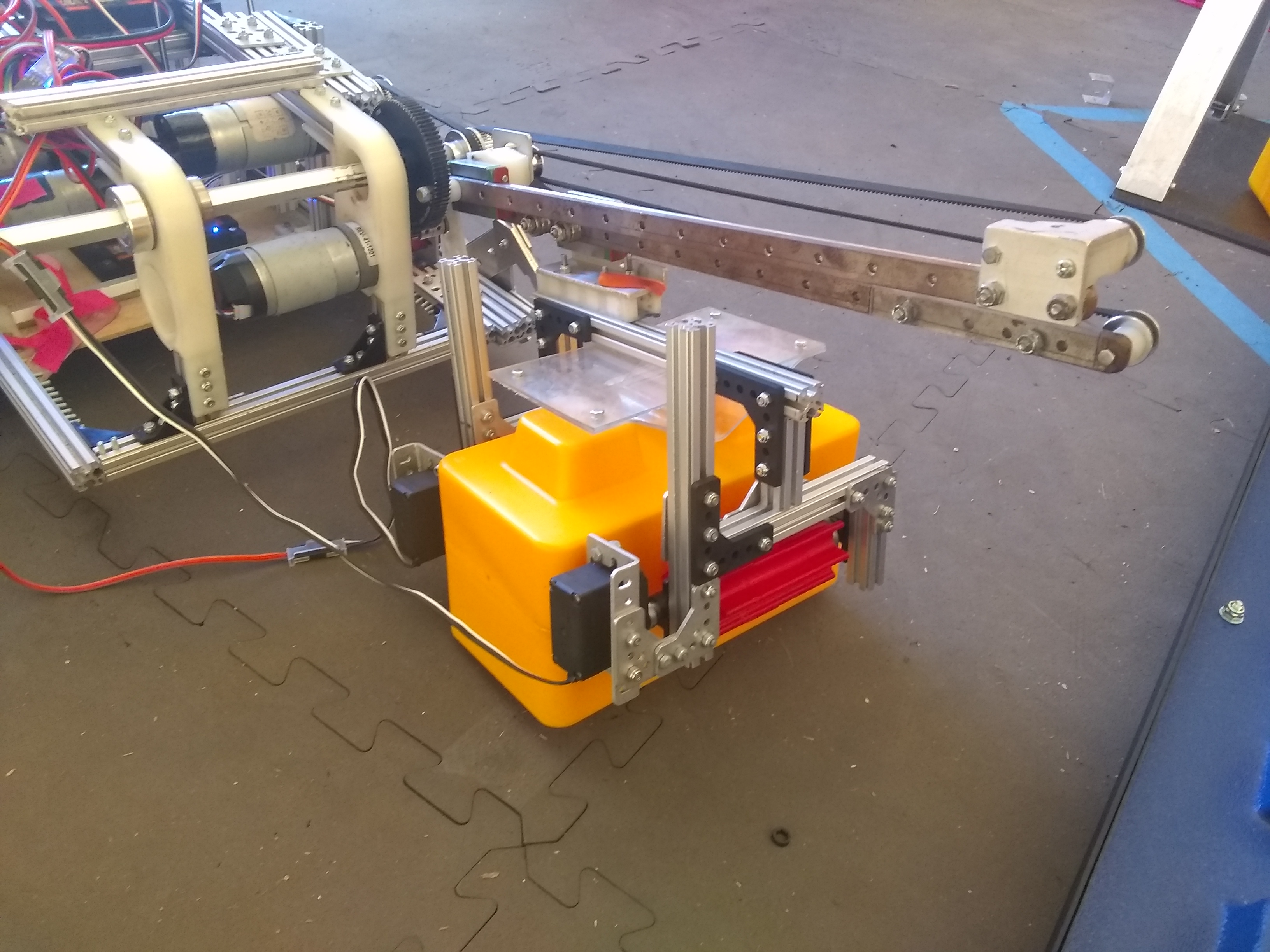

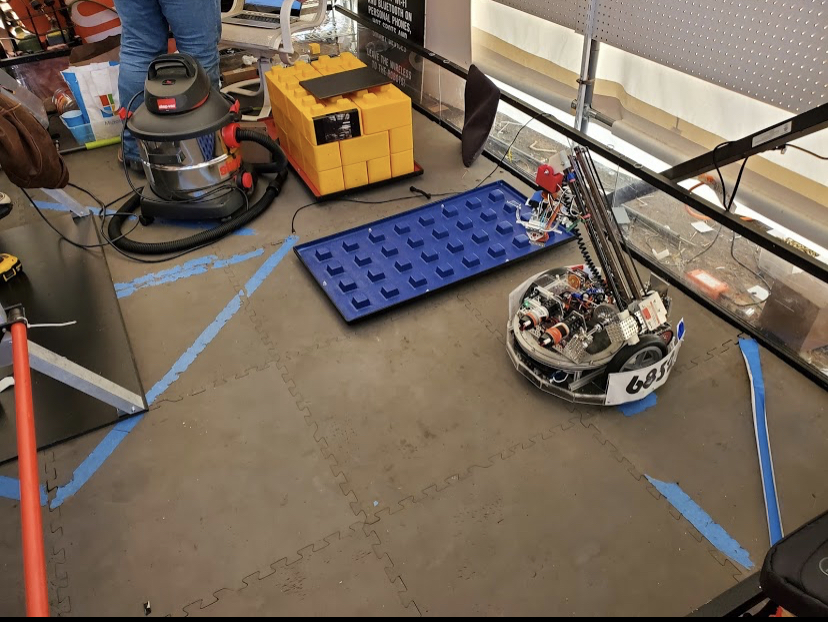

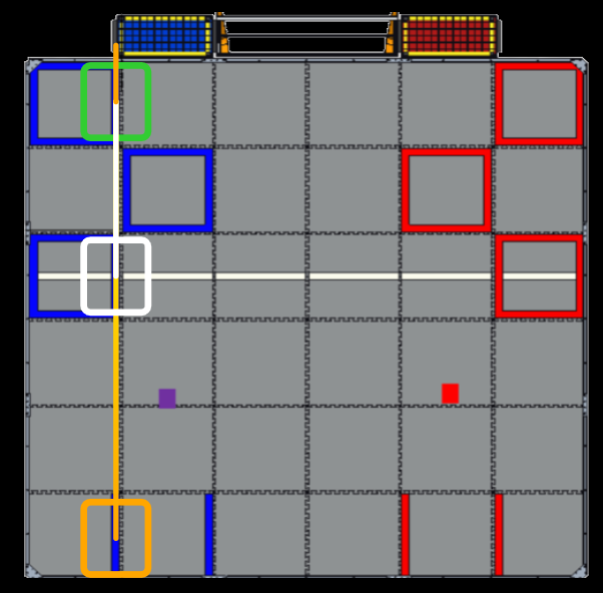

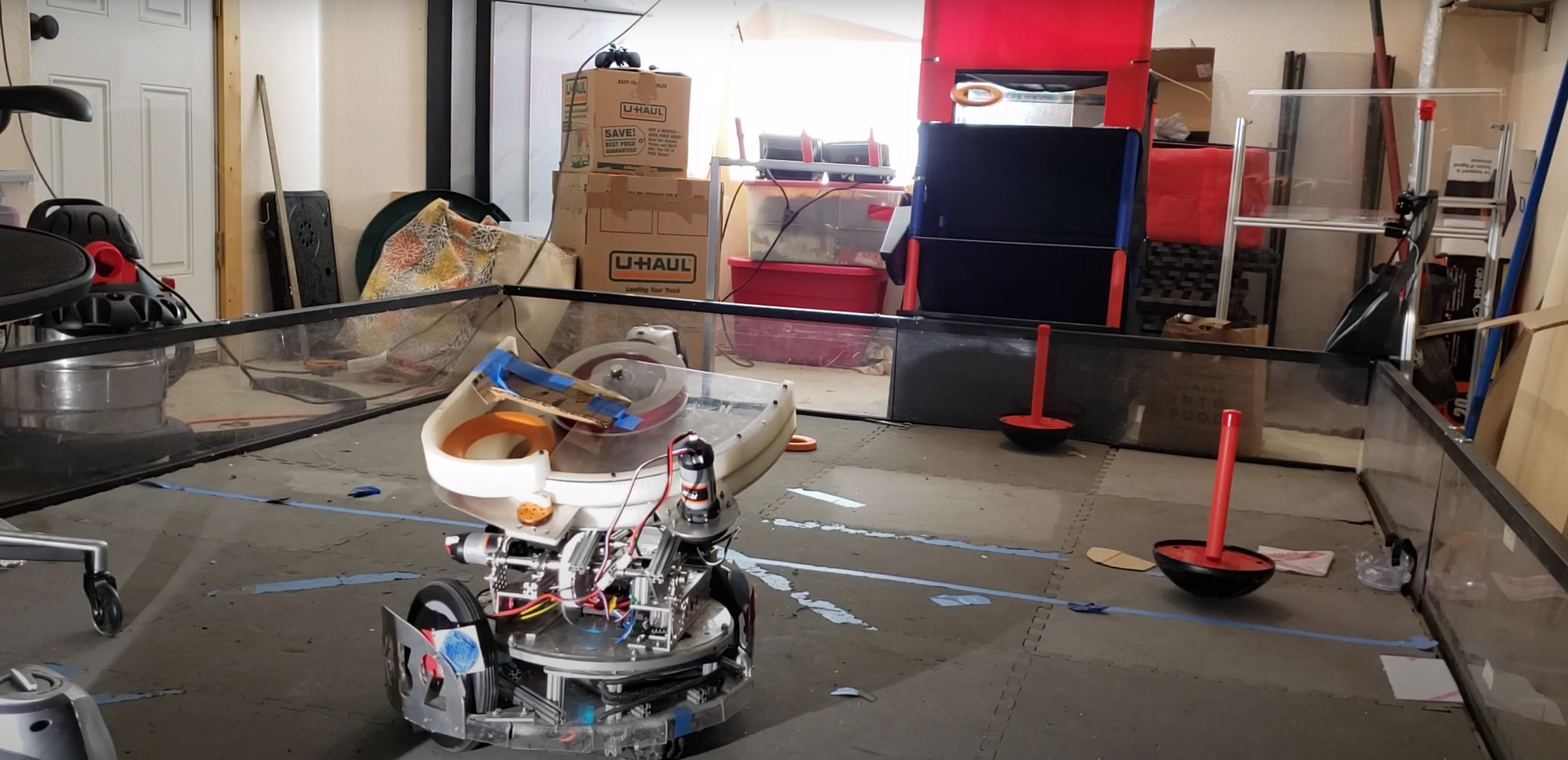

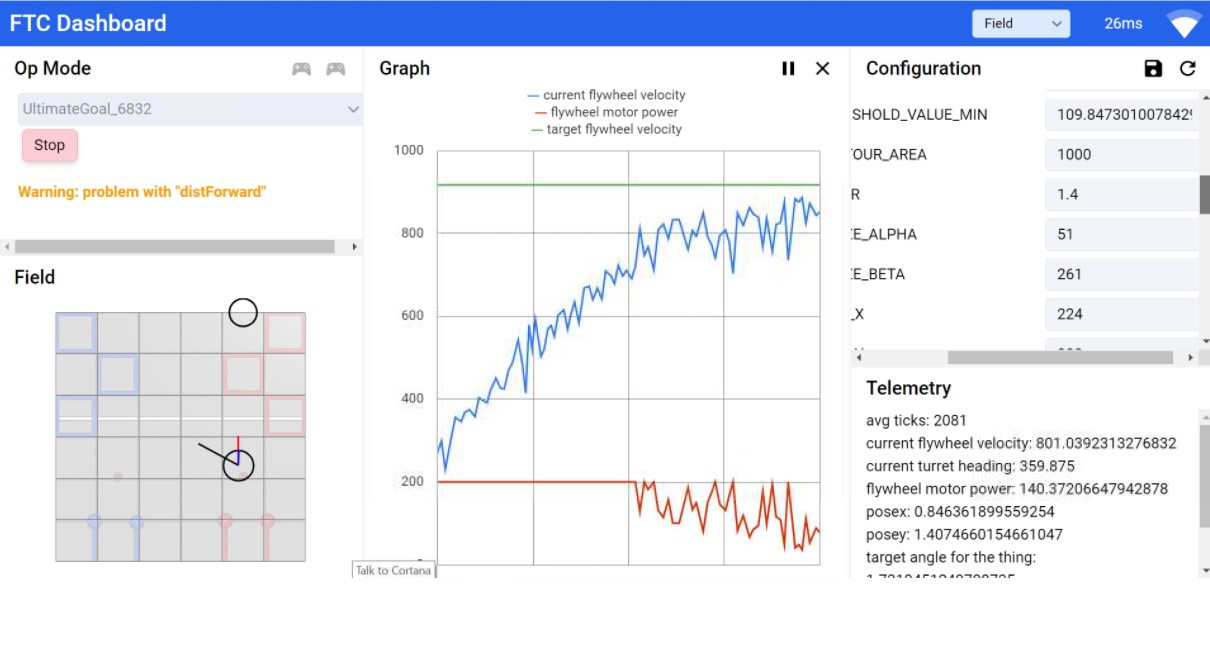

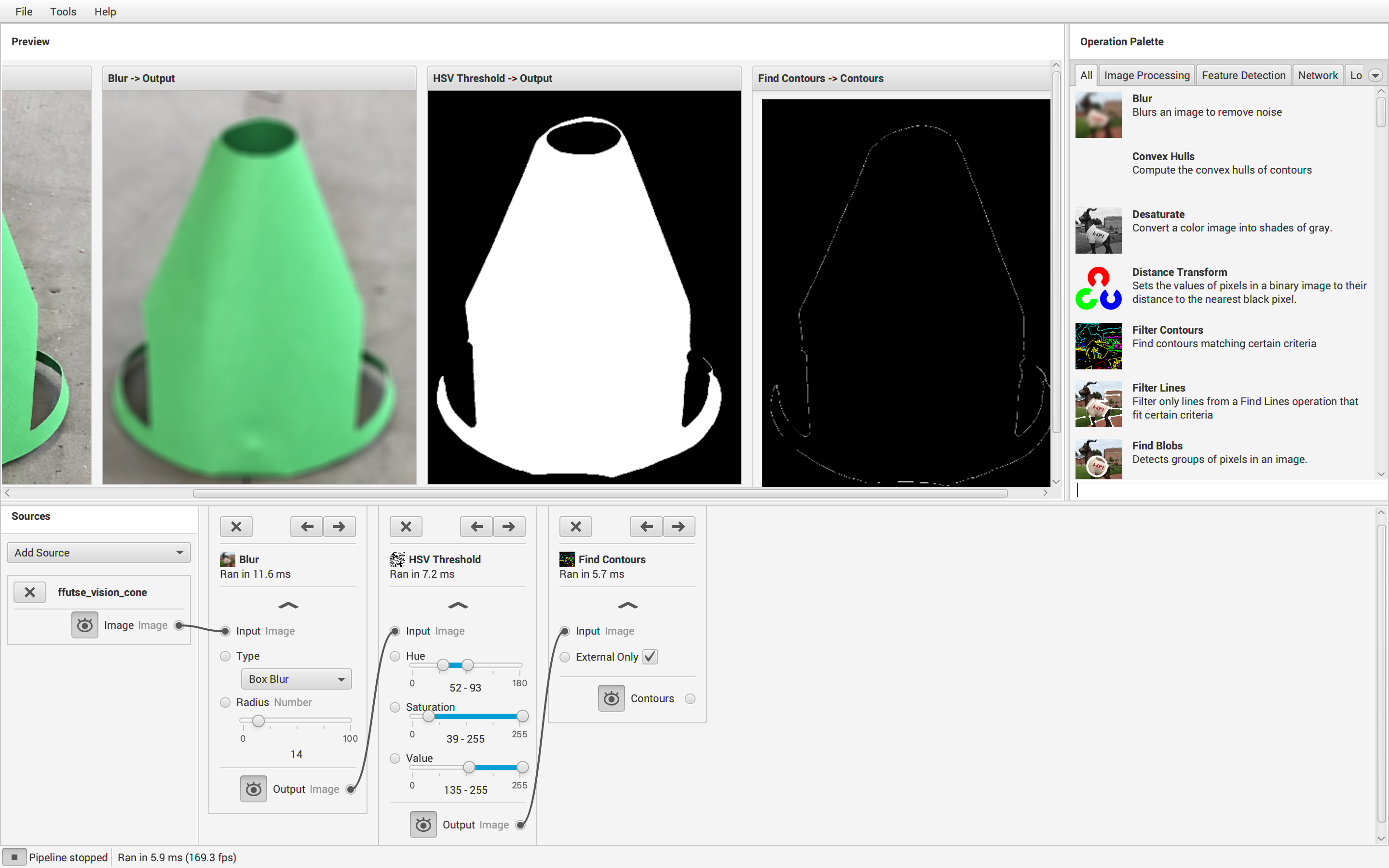

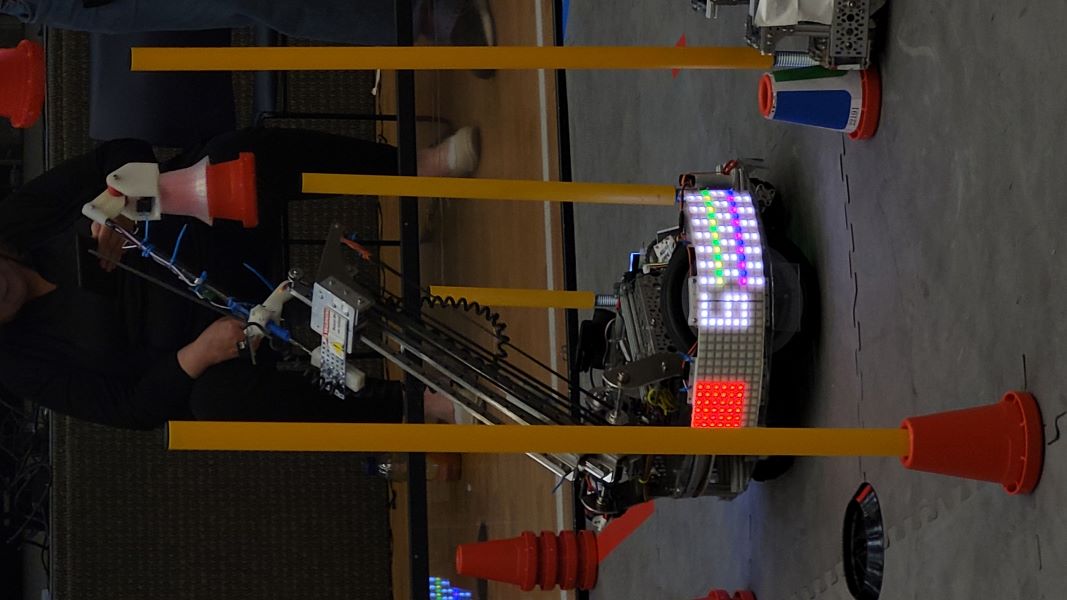

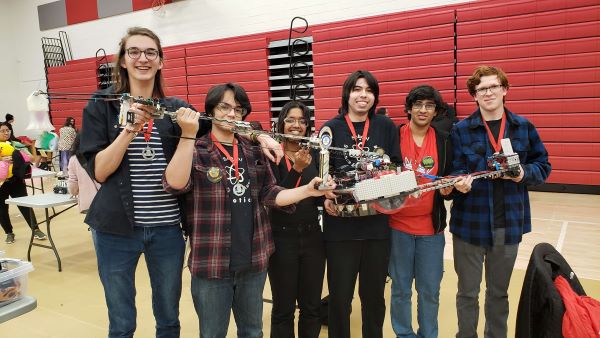

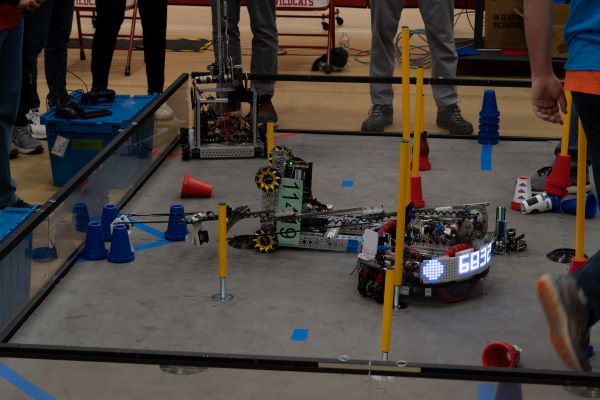

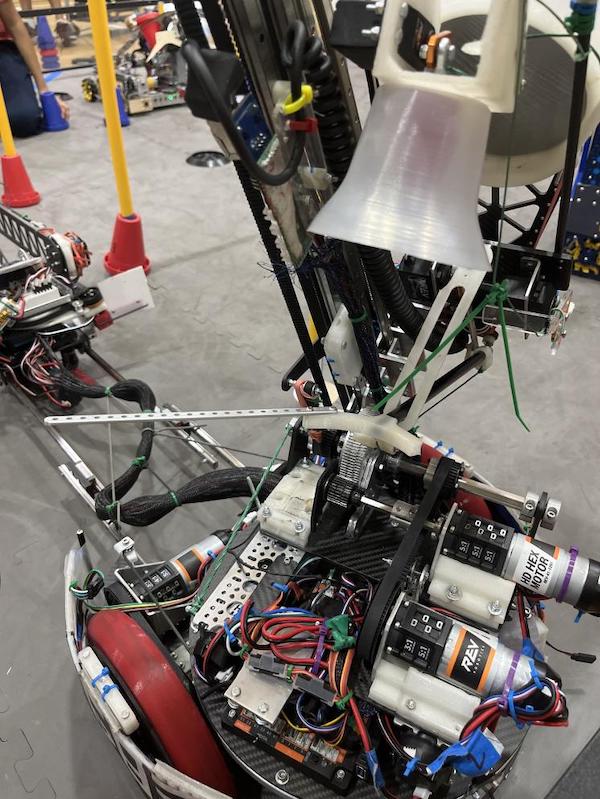

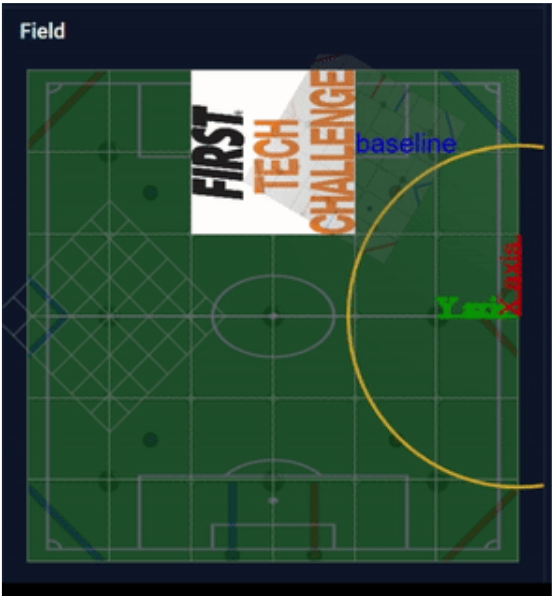

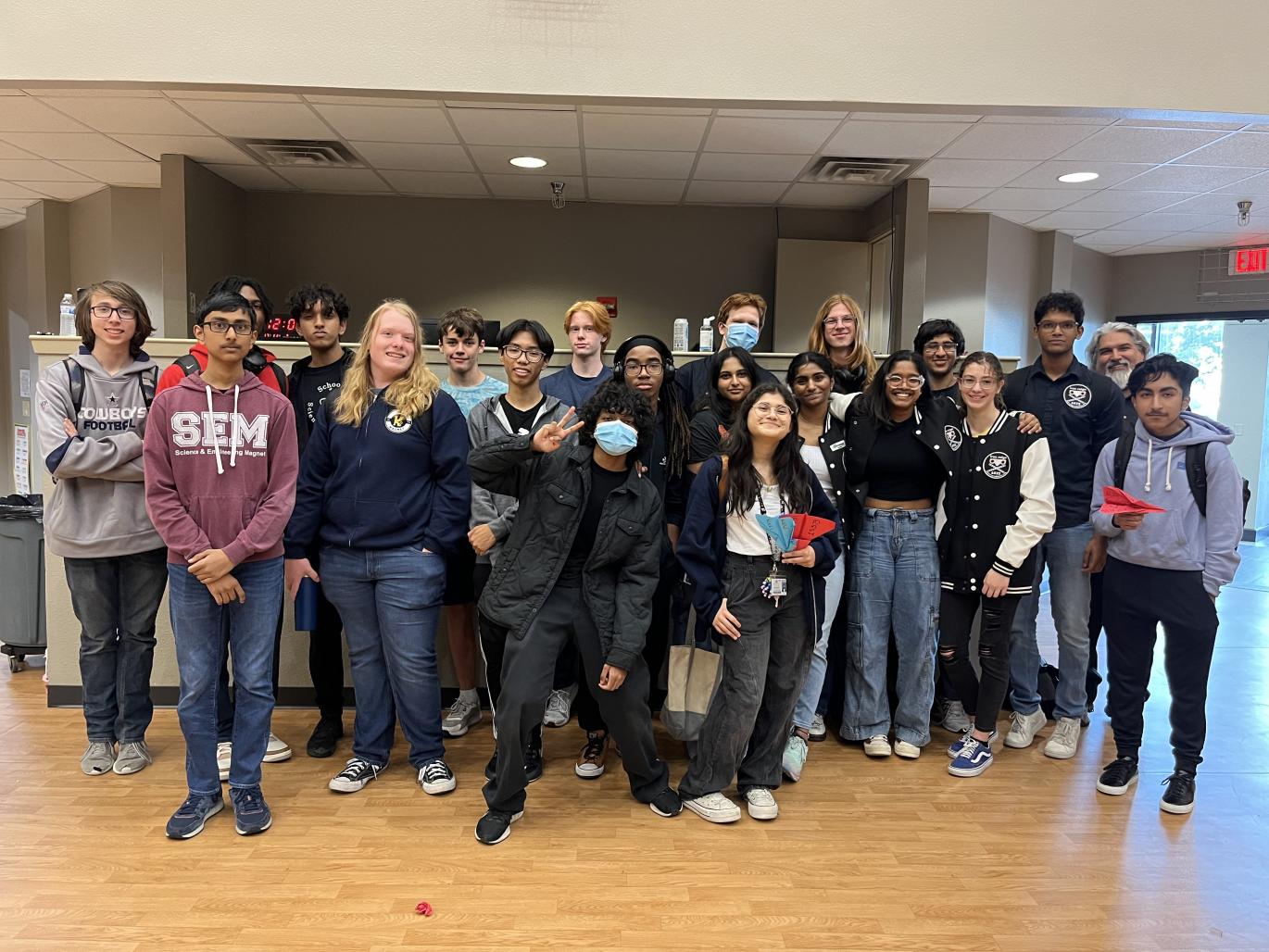

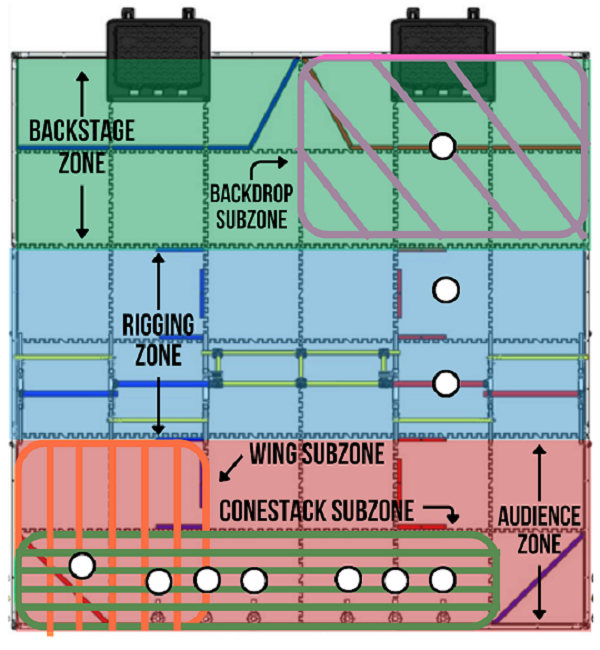

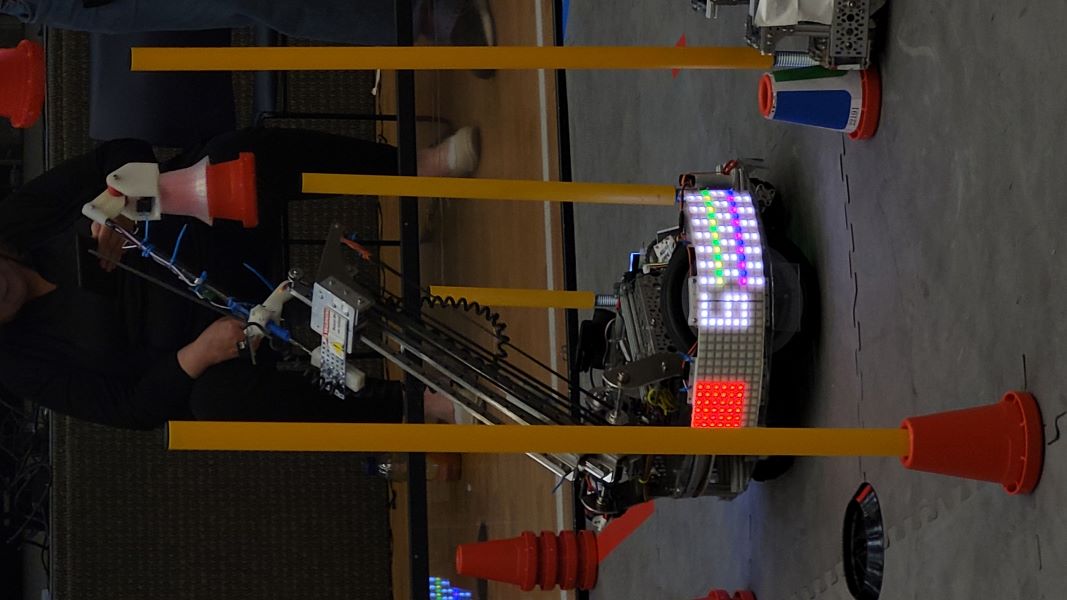

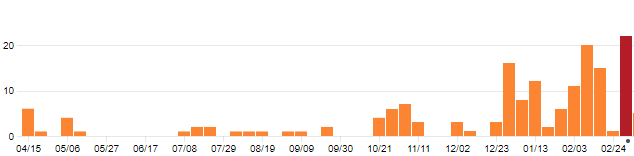

Today, Iron Reign and our two sister teams participated in the 3rd League Meet for the U League at UME Preparatory for qualification going into the Tournament next week. Overall, we did solidly, going 4-2 at the meet; however, we lost significant tiebreaker points because our autonomous was unreliable. At the end of the day, we didn’t do as well as we would have liked, but we did end the meet ranked #2 in the U League and #5 across both the D and U Leagues, and we got some valuable driver practice and code development along the way.

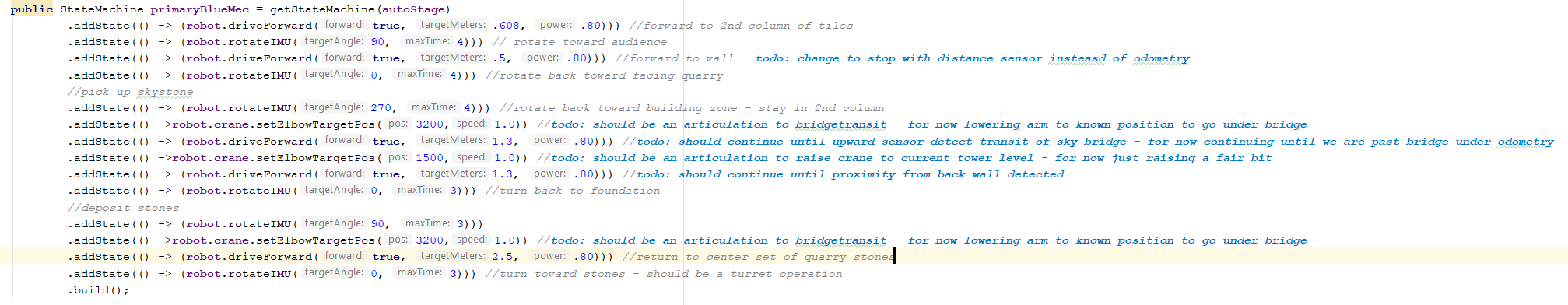

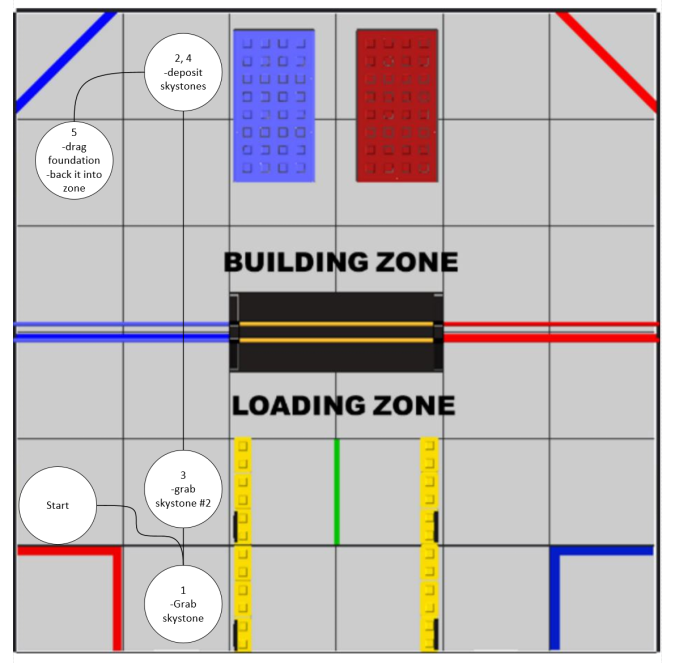

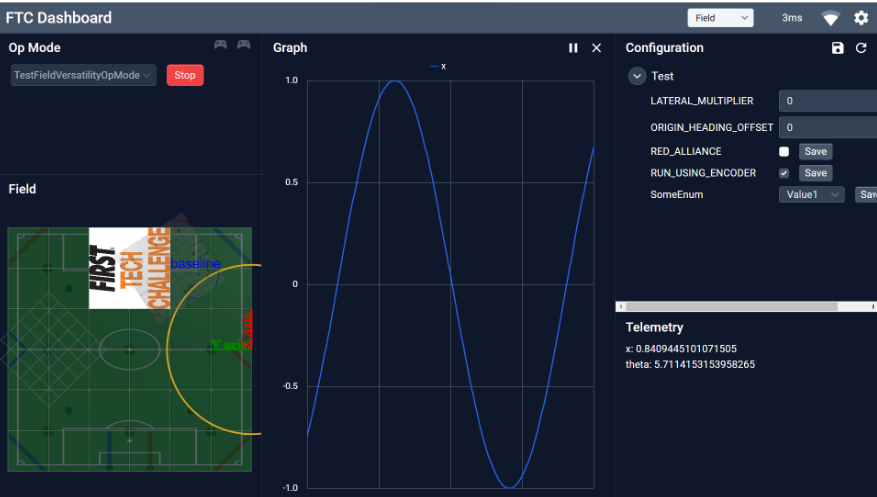

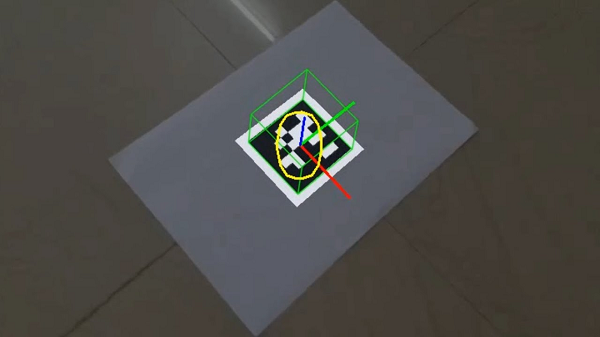

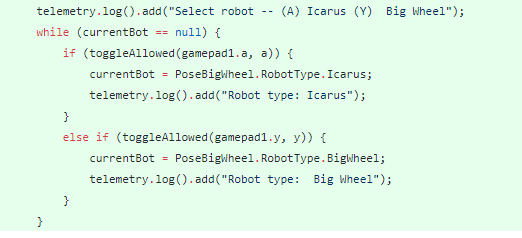

We entered the meet with brand new autonomous code that, ideally, would score three cones and park, equating to about 35 points.

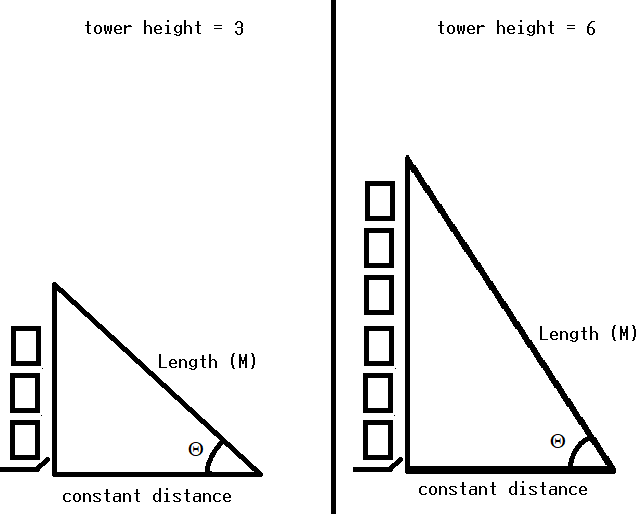

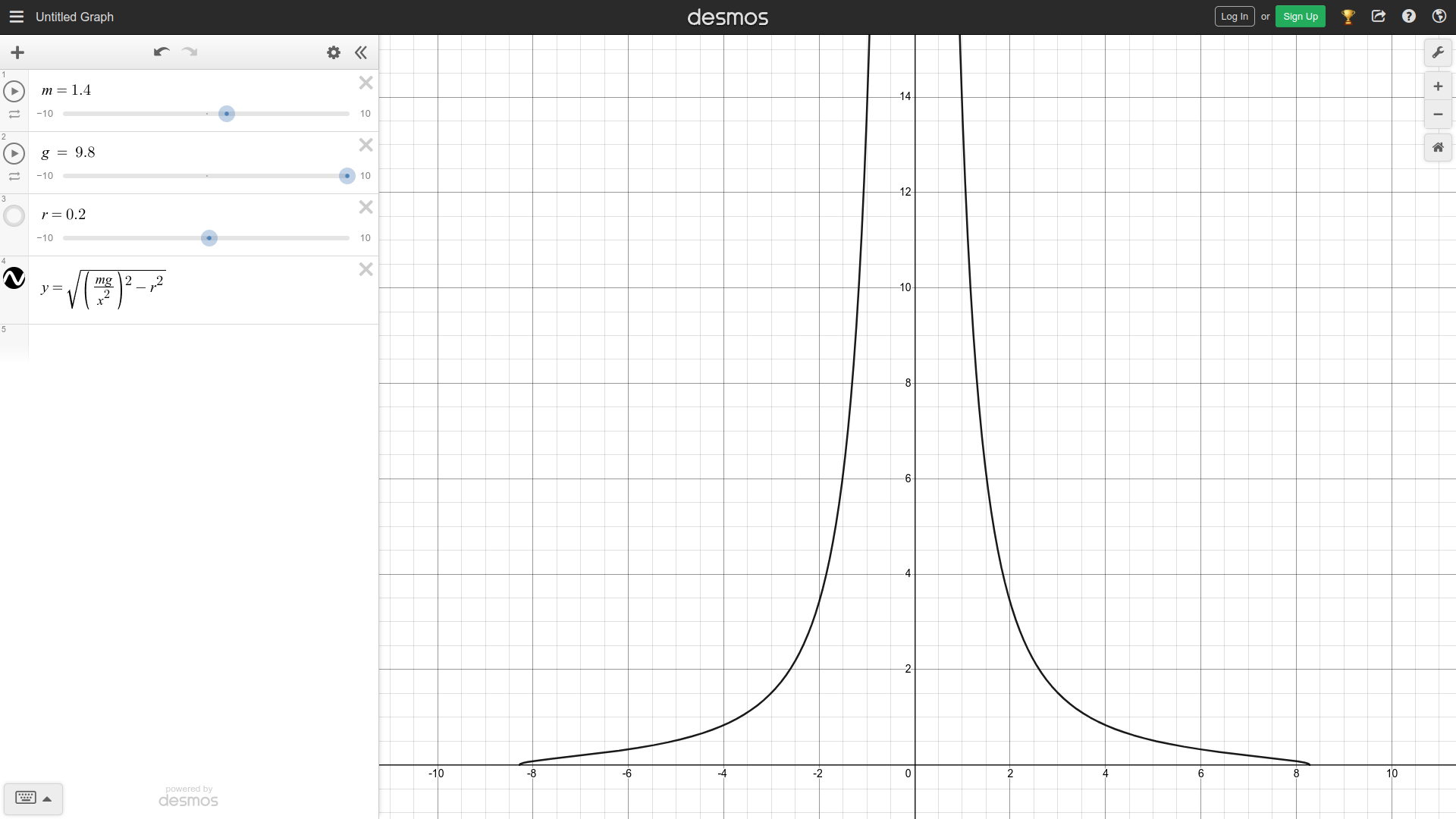

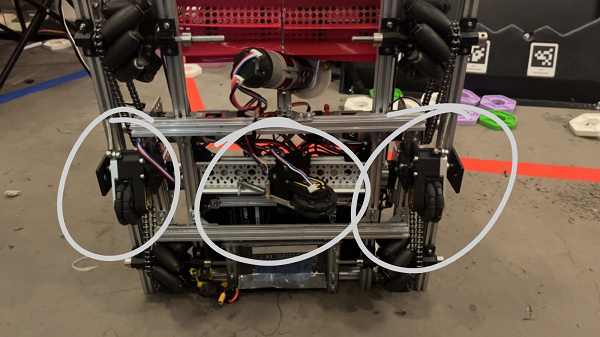

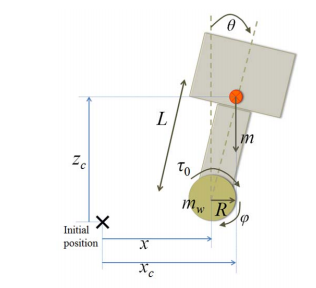

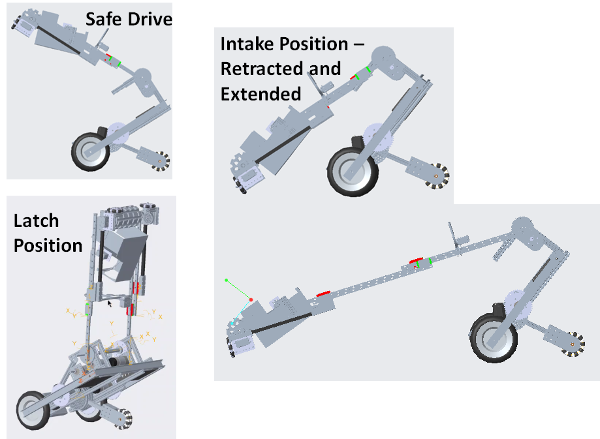

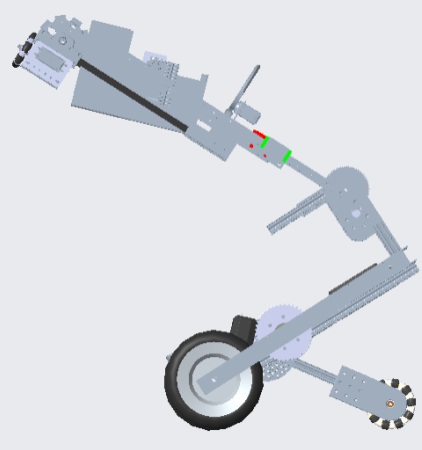

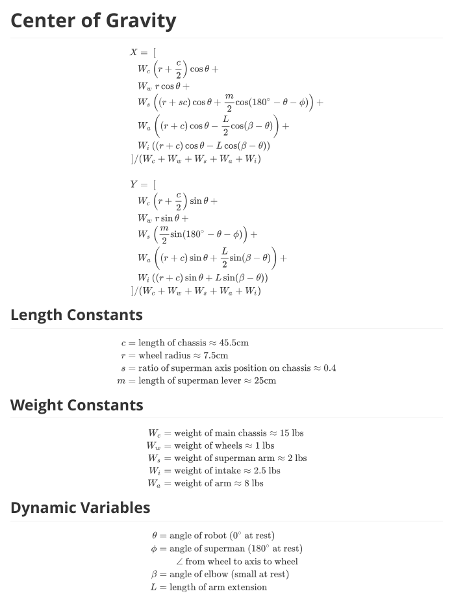

Another main issue that plagued us was tipping, as our robot tipped over in practice and in an actual match due to the extension of the arm and constant erratic movement, which we will elaborate on in the play-by-play section.

We also want to preface that much of our code and drive teams were running on meager amounts of sleep due to late nights working on TauBot2, so a lot of the autonomous code and driver error can be attributed to fatigue caused by sleep deprivation. One of our takeaways from this meet is to avoid late nights as much as possible, stick to a timeline, and get the work done beforehand.

Play by Play

Match 1: 46 to 9 Win

In the autonomous section, our robot missed the initial preload cone and fell short of grabbing cones from the cone stack because there was an extra cone. Finally, our robot overshot the zone and got no parking points. Overall, we scored 0 points.

In the tele-op and endgame sections, because of a poor quality battery that was overcharged at around 13.9 volts, our entire shoulder and arm stopped functioning and just stood stationary for the whole period. In the end, we only scored 2 points by parking in the corner. However, due to the extra cone on the cone stack at the beginning of autonomous, we were granted a rematch.

In the autonomous of the rematch, our preload cone dropped to the side of the tall pole, but we were able to grab and score one cone on the tall pole, missing the second one. However, the robot did not fully move into Zone 3, meaning we didn’t get any parking points. Overall, we scored 5 points in autonomous.

In the tele-op and endgame, we scored two cones on the tall poles and one on a medium pole, which equates to 20 points in that section, including six ownership points.

Total Points: 29

Analysis: We need to ensure that our battery voltage is not too high, because an overcharged battery severely impacts performance. More autonomous tuning is also required to score both cones and properly park. Our light battery also died in the middle of the second match due to low charge, which will need to be taken care of in the future since that can lead to major penalties.
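
One way to catch a bad battery before a match is a quick pre-match voltage check. The sketch below is only an illustration: the class name, the `check` method, and both voltage limits are hypothetical placeholders, not values from our robot code. On the robot, the reading would come from the SDK's voltage sensor rather than a hard-coded number.

```java
// Hypothetical pre-match battery check; not part of our actual robot code.
class BatteryCheck {
    // Assumed limits for illustration only; real thresholds would come from testing.
    static final double MIN_VOLTS = 12.0;
    static final double MAX_VOLTS = 13.5;

    enum Status { TOO_LOW, OK, TOO_HIGH }

    // Classifies a battery reading (in volts) against the assumed limits.
    static Status check(double volts) {
        if (volts < MIN_VOLTS) return Status.TOO_LOW;
        if (volts > MAX_VOLTS) return Status.TOO_HIGH;
        return Status.OK;
    }

    public static void main(String[] args) {
        // The overcharged battery from Match 1 read around 13.9 volts.
        System.out.println(check(13.9)); // prints TOO_HIGH
    }
}
```

With limits like these, the 13.9 volt battery from Match 1 would have been flagged before we ever queued.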

Match 2: 68 to 63 Loss

In the autonomous section, our robot performed great and went according to plan, scoring the preload cone and 2 more on the tall pole and parking, equating to 35 points.

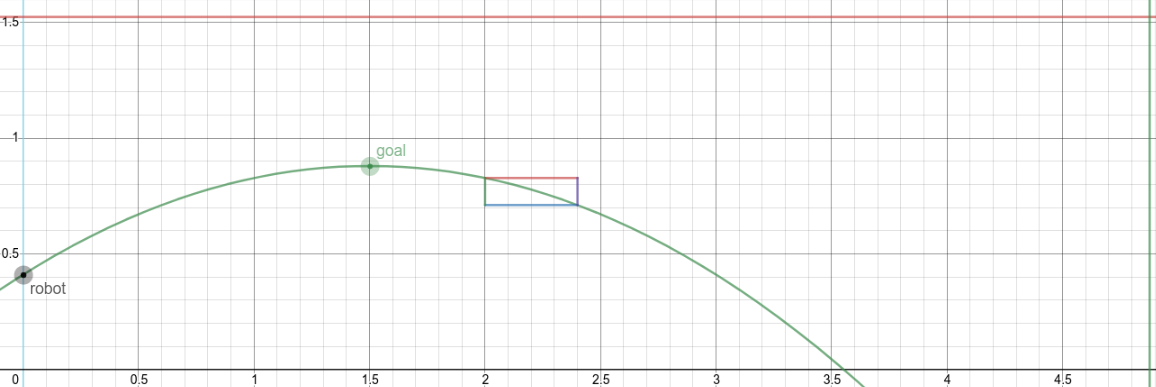

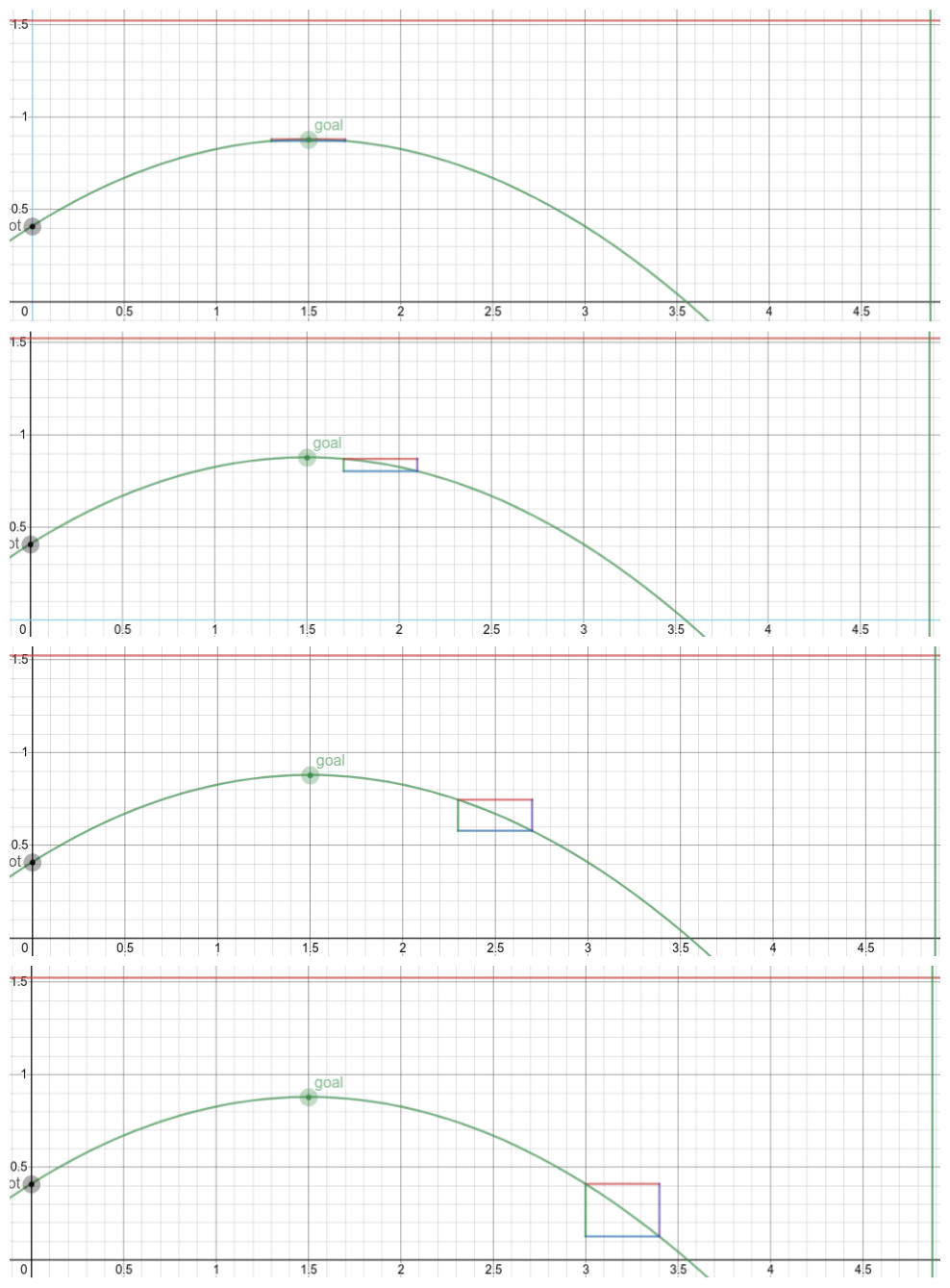

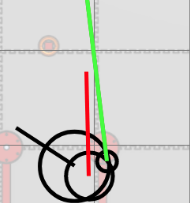

However, in the tele-op and endgame, the robot’s issues reared their ugly heads. We did score one cone on a tall pole and one on a medium pole. However, after our opponent took ownership of one of our tall poles, we went for another pole instead of taking it back, and that led to us tipping over as the arm extended, which ended the game for us. We did not incur any penalties, but had we scored that cone and taken back our pole, we would have won and gotten to add a perfect autonomous to our tiebreaker points. We ended up scoring 9 points in this section.

Total Points: 44

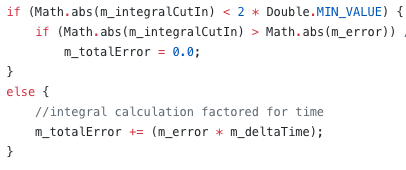

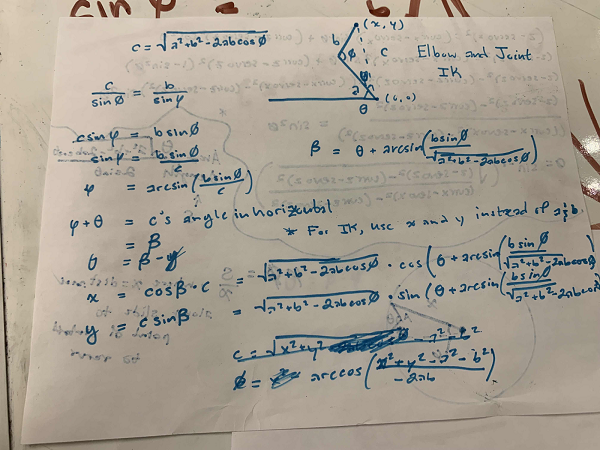

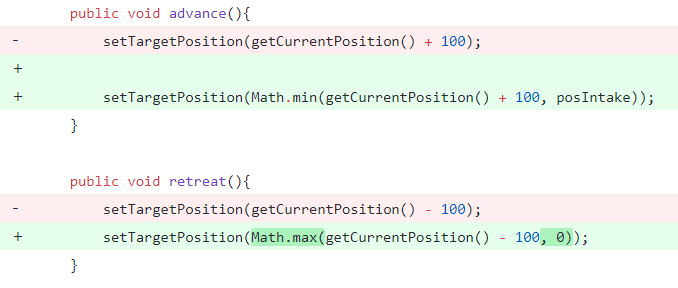

Analysis: Excellent autonomous performance marred by a tipping issue and sub-par driver performance. The anti-tipping code did not work as intended, and that issue will need to be fixed to allow TauBot to score across its entire range.
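
To make the anti-tipping idea concrete, here is a rough sketch of one possible approach: limit how far the arm may extend as the chassis pitches. This is not our actual anti-tipping code. The class, the method names, and every threshold are hypothetical, and the pitch angle is assumed to come from the robot's IMU.

```java
// Hypothetical anti-tipping sketch; NOT our actual code, and all limits are untuned placeholders.
class AntiTipSketch {
    static final double WARN_PITCH_DEG = 5.0;  // assumed: start limiting the arm at this tilt
    static final double TIP_PITCH_DEG = 15.0;  // assumed: fully retract at or beyond this tilt
    static final double MAX_EXTENSION = 1.0;   // normalized full arm extension

    // Returns the allowed arm extension (0..1) for a given chassis pitch in degrees.
    static double allowedExtension(double pitchDeg) {
        double tilt = Math.abs(pitchDeg);
        if (tilt <= WARN_PITCH_DEG) return MAX_EXTENSION;
        if (tilt >= TIP_PITCH_DEG) return 0.0;
        // Scale down linearly between the warning and tip thresholds.
        double fraction = (TIP_PITCH_DEG - tilt) / (TIP_PITCH_DEG - WARN_PITCH_DEG);
        return MAX_EXTENSION * fraction;
    }

    // Clamps the driver's requested extension to whatever the current tilt allows.
    static double limit(double requestedExtension, double pitchDeg) {
        return Math.min(requestedExtension, allowedExtension(pitchDeg));
    }
}
```

Under these assumed thresholds, a driver asking for 80% extension while the chassis is pitched 10 degrees would be held to 50%, and the arm would be pulled in completely once the tilt reaches 15 degrees.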

Match 3: 78 to 31 Win

In the autonomous section, we missed the preload cone and couldn’t grab both the cones on the stack, and we also did not park as our arm and shoulder crossed the border. As a result, we scored 0 points total in this section.

In the tele-op and endgame section, we scored a lot better, scoring two cones on the tall poles, one on the medium poles, and two on the short ones. This, including our 15 ownership points, equates to a total of 35 points.
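
As a sanity check on that arithmetic, here is a minimal tally sketch. The point values (5 per cone on a tall pole, 4 on a medium pole, 3 on a short pole, and 3 per owned junction) are our reading of the scoring table, and the class and method names are hypothetical helpers, not part of our robot code.

```java
// Hypothetical helper for double-checking tele-op totals; not part of the robot code.
class TeleOpTally {
    // Assumed point values per cone, by pole height.
    static final int TALL = 5;
    static final int MEDIUM = 4;
    static final int SHORT = 3;
    // Assumed points per junction owned at the end of the match.
    static final int OWNERSHIP = 3;

    // Adds up cone points and ownership points for one match.
    static int score(int tallCones, int mediumCones, int shortCones, int ownedJunctions) {
        return tallCones * TALL
                + mediumCones * MEDIUM
                + shortCones * SHORT
                + ownedJunctions * OWNERSHIP;
    }

    public static void main(String[] args) {
        // Match 3: two tall, one medium, two short, and five owned junctions (15 ownership points).
        System.out.println(score(2, 1, 2, 5)); // prints 35
    }
}
```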

Total Points: 35

Analysis: The autonomous does need tuning to at least park because of the value of autonomous points. Our lack of driver practice is also slightly evident in the time it takes to pick up new cones, but that can be solved through increased gameplay.

Match 4: 40 to 32 Win

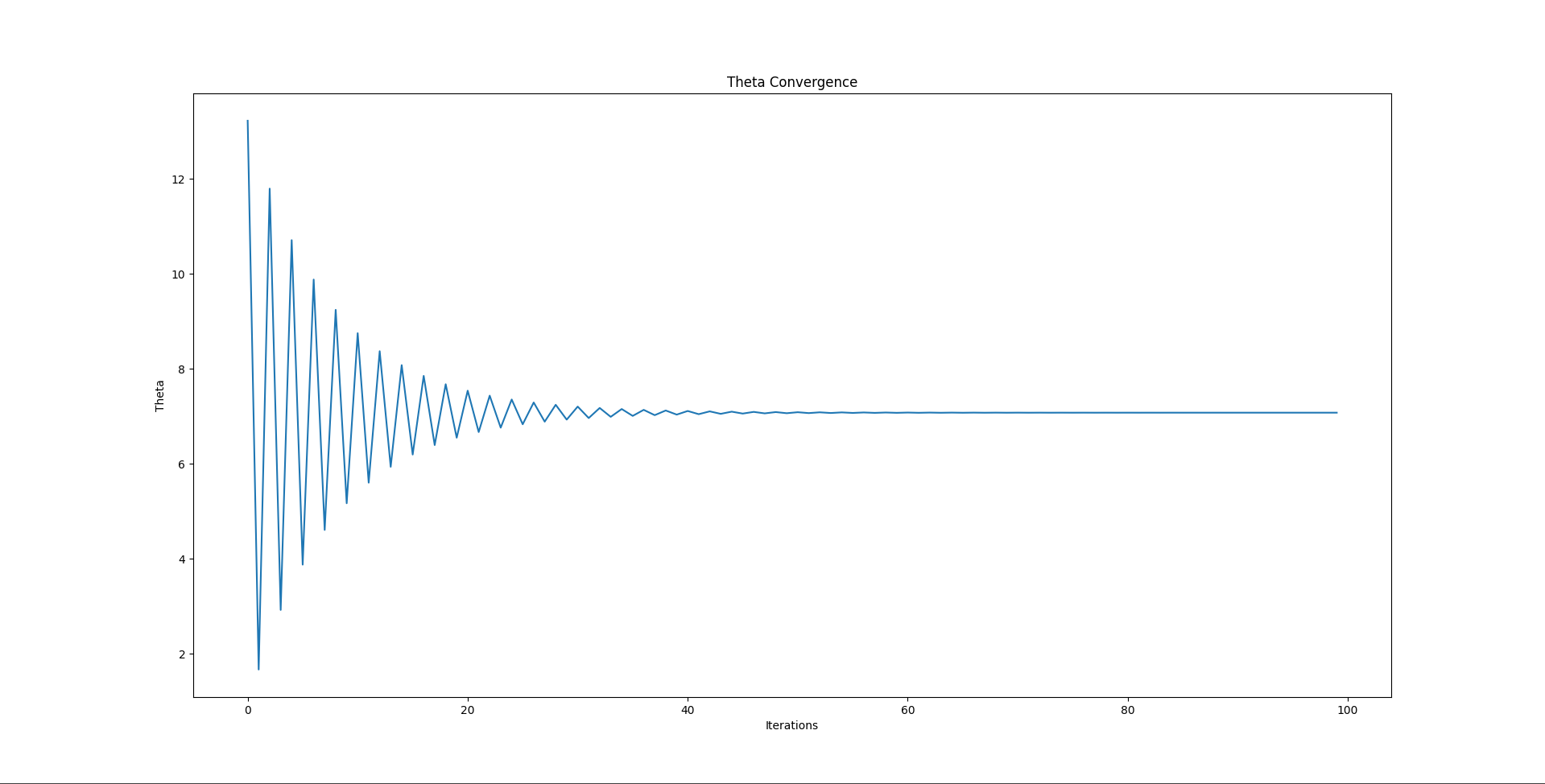

In the autonomous section, we scored our preload cone on a tall pole, but intense arm oscillation meant we missed the first cone from the stack on drop-off and the second cone from the stack on pickup. We also overshot parking again, bringing our total in this period to 5 points.

In the tele-op and endgame section, we scored two cones on the tall poles. So with ownership points, our total in this section was 16 points. We did miss one cone drop-off, though, and our pickups in the substation could have been better as we tipped over a couple of cones during pickup.

Total Points: 21

Analysis: Parking still needs to be tuned. Cone scoring was a lot more consistent, but it still requires a bit more work. Other than that, more driver practice will help cycle times and scoring.

Match 5: 51 to 24 Win

In the autonomous section, our arm never engaged to go and score our preload, but the robot did park, meaning we scored 20 points in this section.

In the tele-op and endgame section, we scored two cones on the tall poles, one cone on the medium poles, and missed three on drop-off. We also scored our beacon, bringing our total point count in this game portion to 30 points.

Total Points: 50

Analysis: Pretty solid match, but the autonomous still needs tuning, and driver practice on cone drop-offs could be better.

Match 6: 74 to 50 Loss

In the autonomous section, our robot missed the drop off of 2 cones but parked, bringing the total to 20 points for autonomous.

In the tele-op and endgame section, miscommunication with our alliance partner led to them knocking our cones out of the substation and blocking our intake path multiple times. We scored three cones on the tall poles and missed two on drop-off. In the end, we scored the beacon, scoring 30 points in this section.

Total Points: 50 (we carried)

Analysis: Communication with our alliance partner was a significant issue in this match. Our alliance partner crossed onto our side and occupied the substation for a while, blocking our human player from placing down cones and blocking our intake path. This led to valuable seconds being wasted.

Overall, our main issues revolved around autonomous code tuning, although our autonomous performance did improve over the course of the meet. On top of that came poor driving, which we chalk up to fatigue, along with minor tipping and communication issues. We plan to understand what happened and solve these problems before next week’s Tournament.

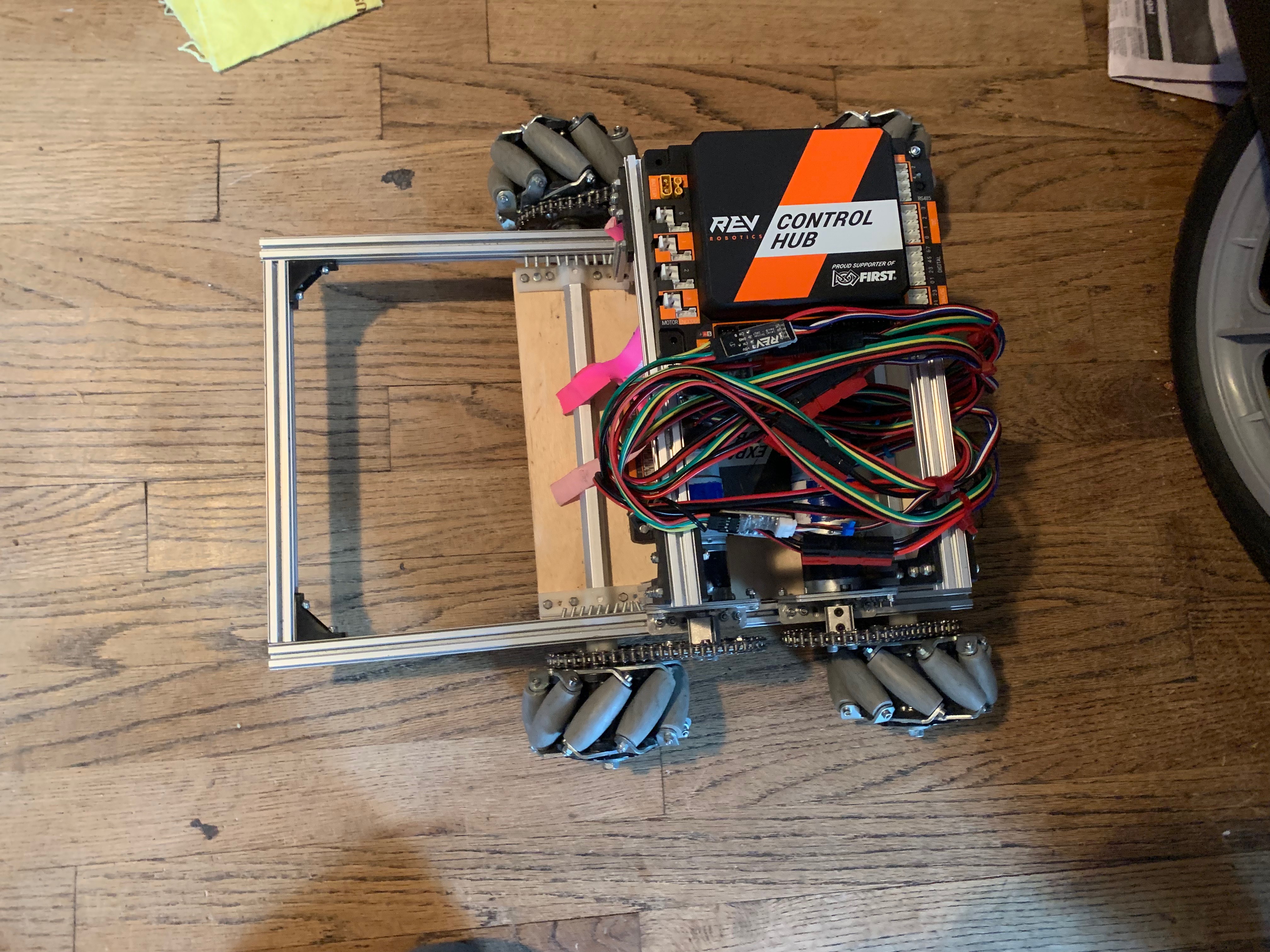

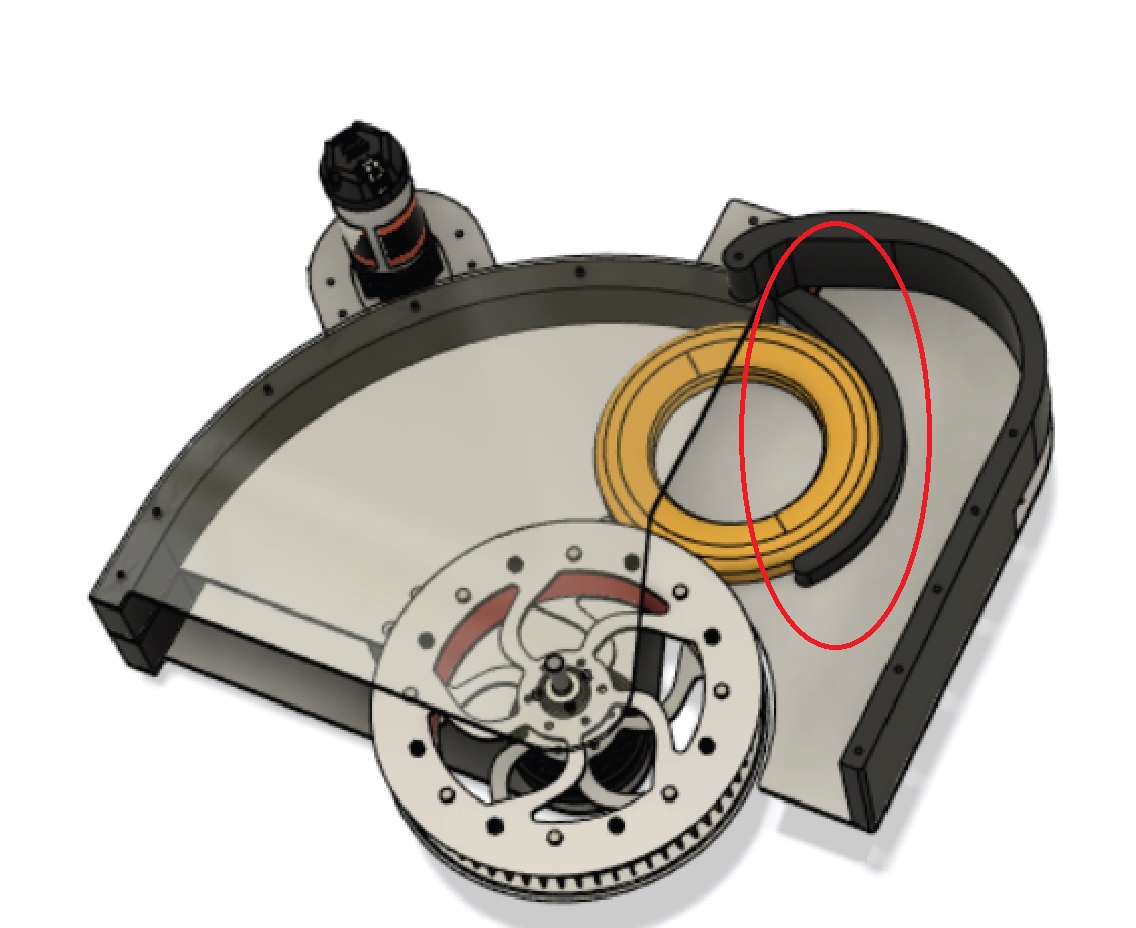

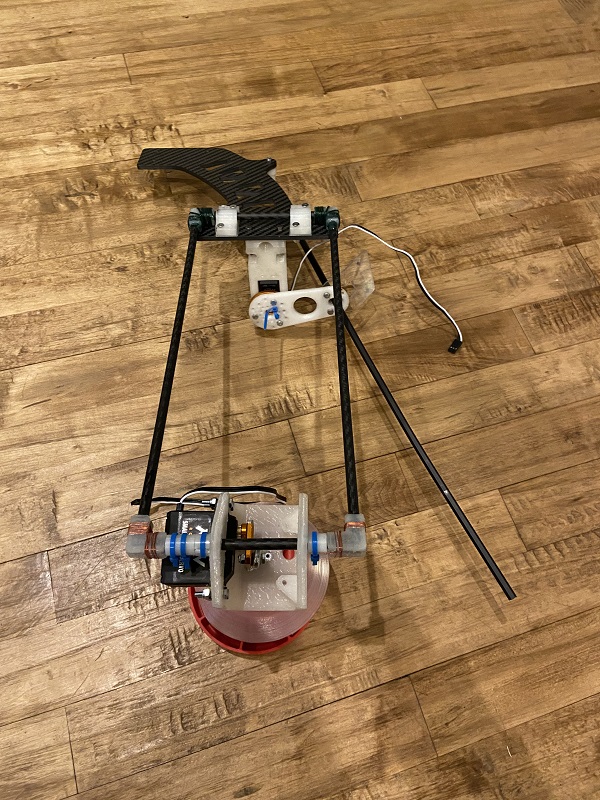

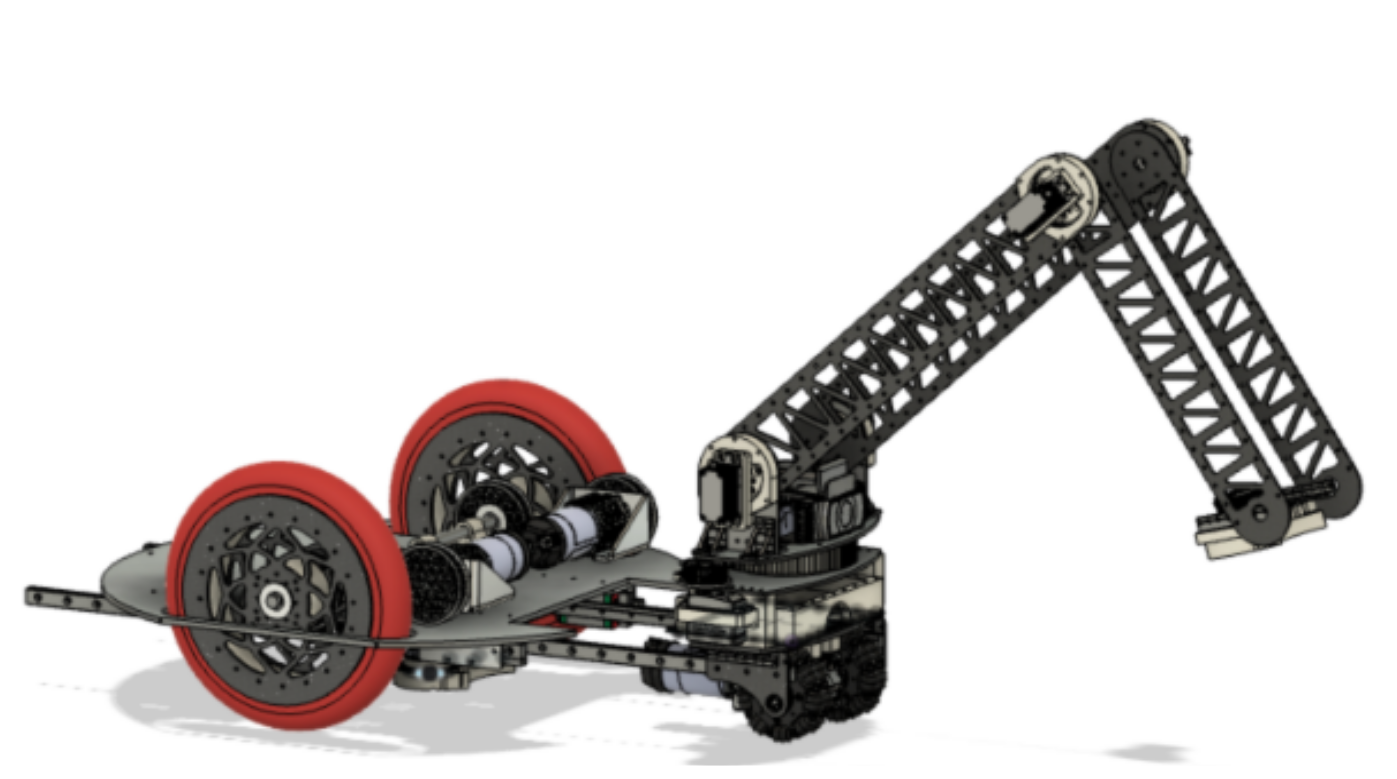

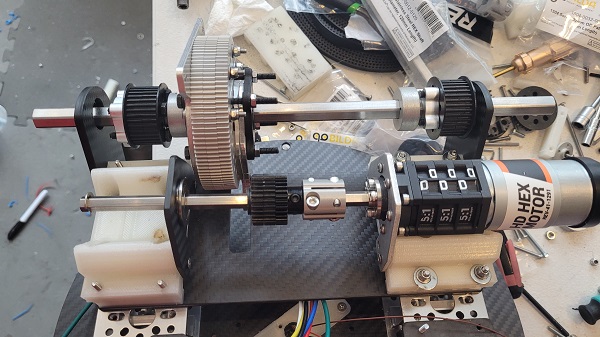

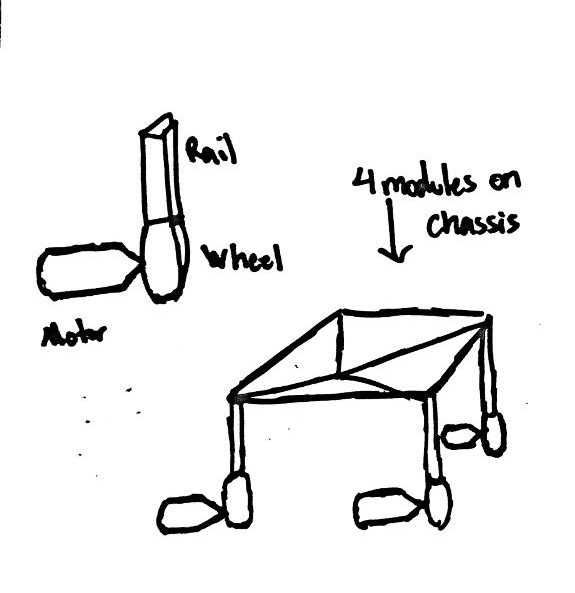

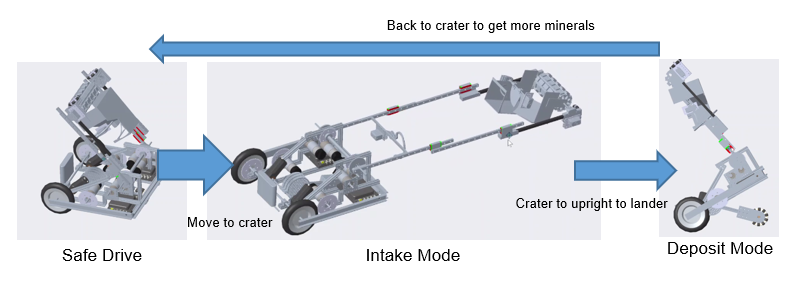

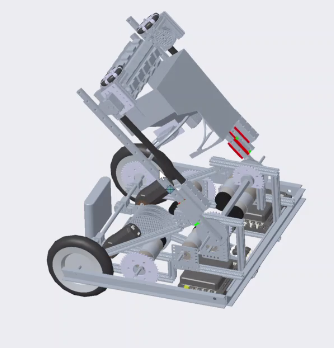

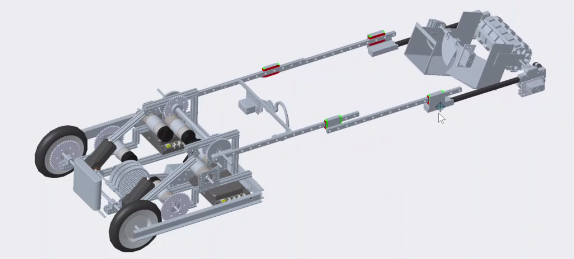

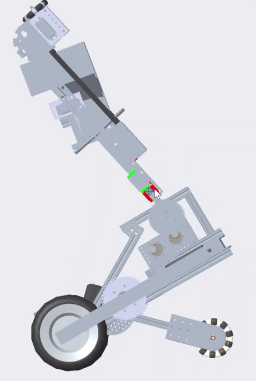

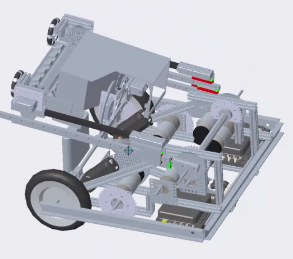

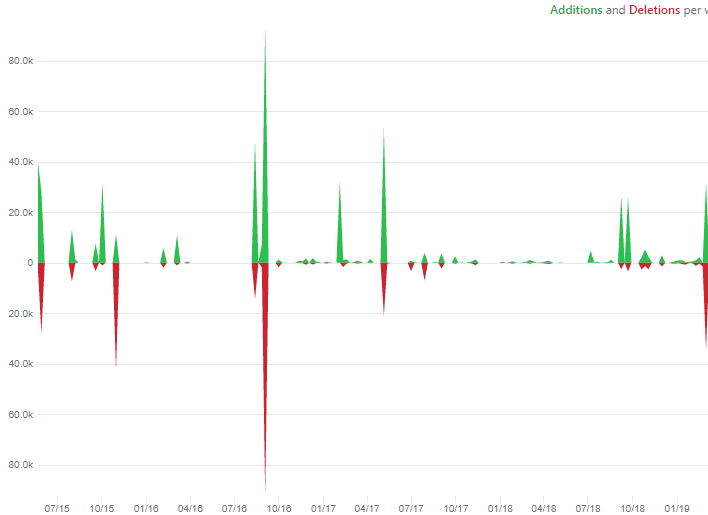

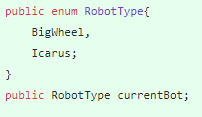

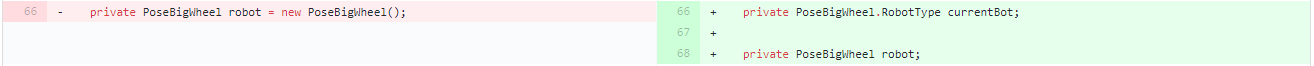

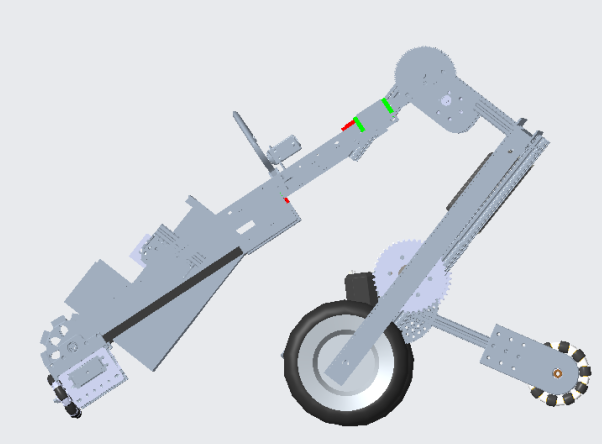

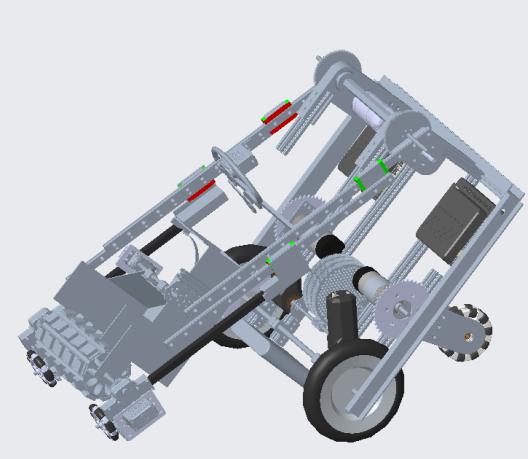

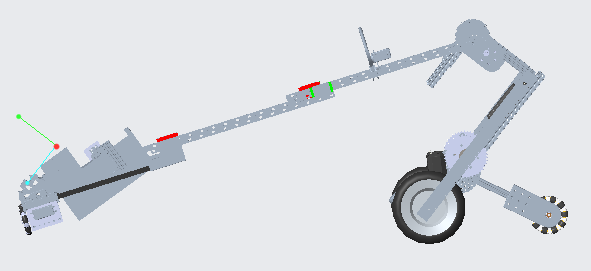

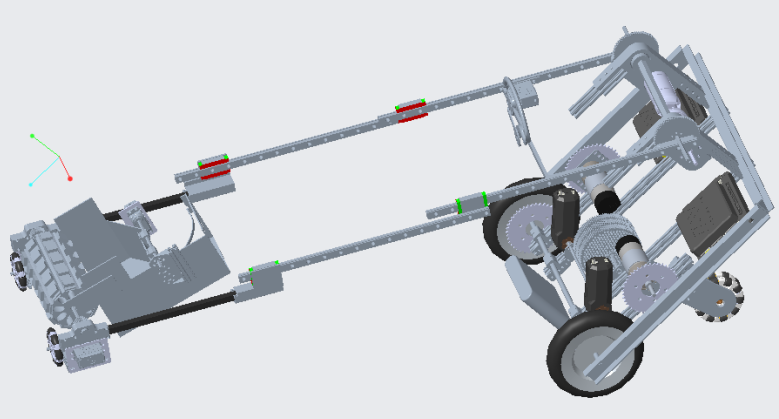

Then, for a quick update on TauBot2, parts of the manufacturing and build have begun, and we hope to incorporate at least part of the new design into the robot we bring to the Tournament on the 28th. Currently, parts of the Chassis and UnderArm have been CNC’ed or 3D printed, and assembly has begun.

Next Steps

Finish up the design of Tau2 and start manufacturing and assembling it, tune our autonomous and anti-tipping code, and get more driver practice in preparation for next week’s Tournament.

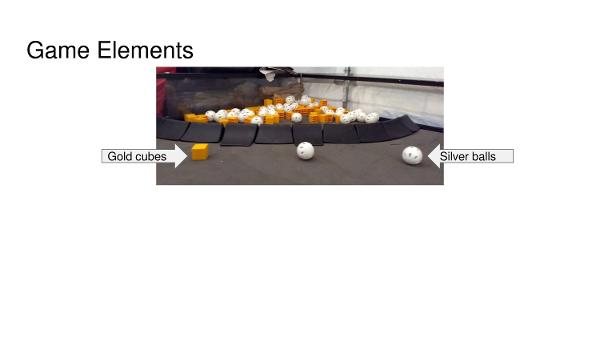

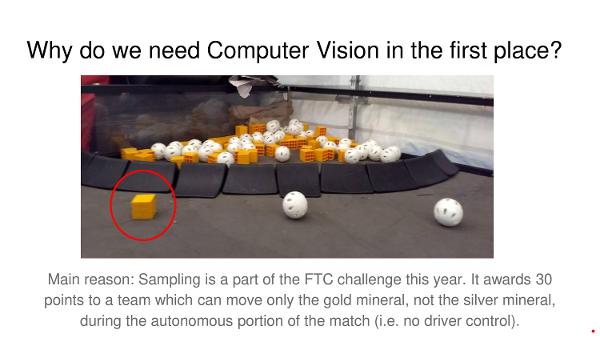

This suggestion uses a plastic flap to "trap" game elements inside it, similar to the lid of a soda cup. You can put marbles through the straw-hole, but you can't easily get them back out.

This one is simple - a linear slide arm attached to a motor so that it can pick up game elements and rotate. We fear, however, that many teams will adopt this strategy, so we probably won't do it. One unique part of our design would be the silicone grips, so that the "claws" can firmly grasp the silver and gold.

When we did Res-Q, we dropped our robot more times than we'd like to admit. To prevent that, we're designing an interlocking mechanism that the robot can use to hang. It'll have an indent and a corresponding recess that resists lateral force by nature of the indent, but can be opened easily.

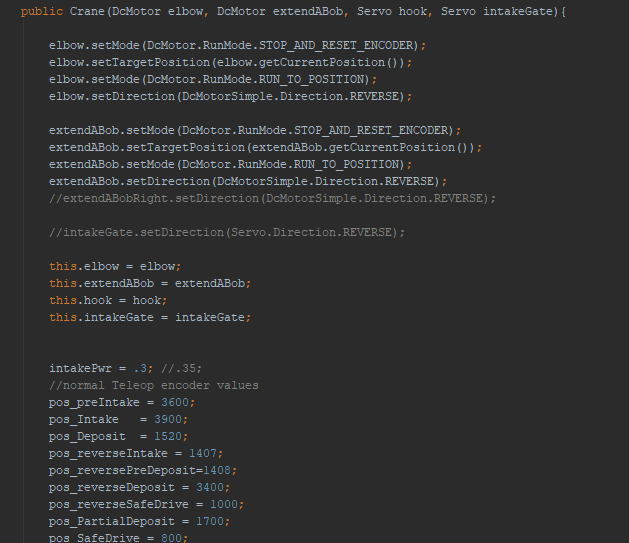

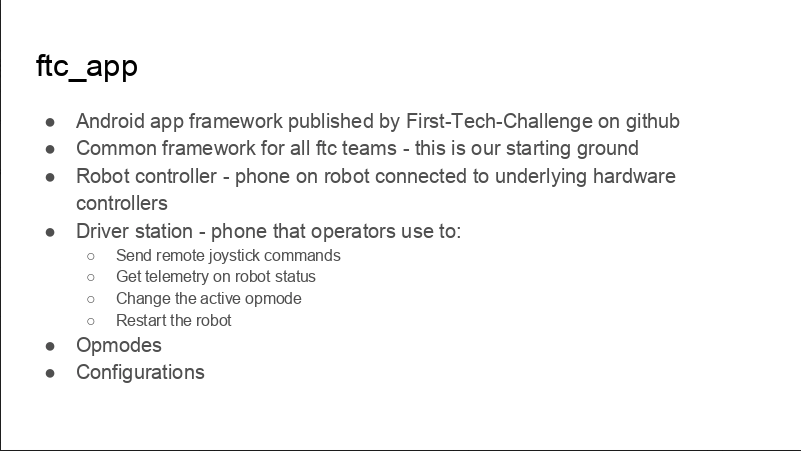

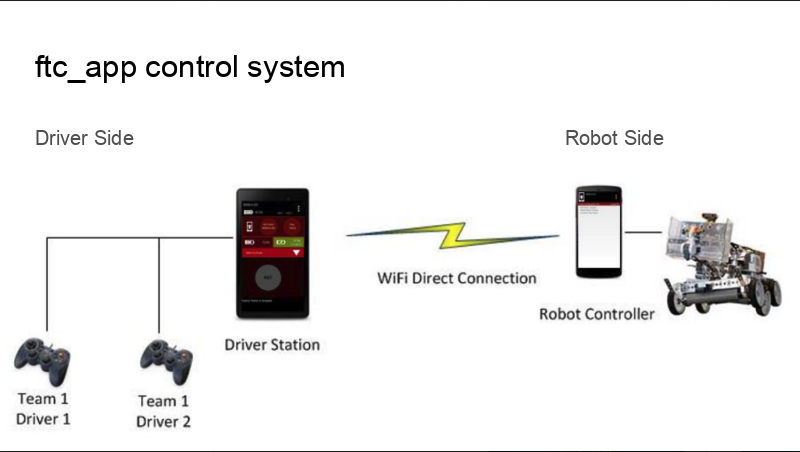

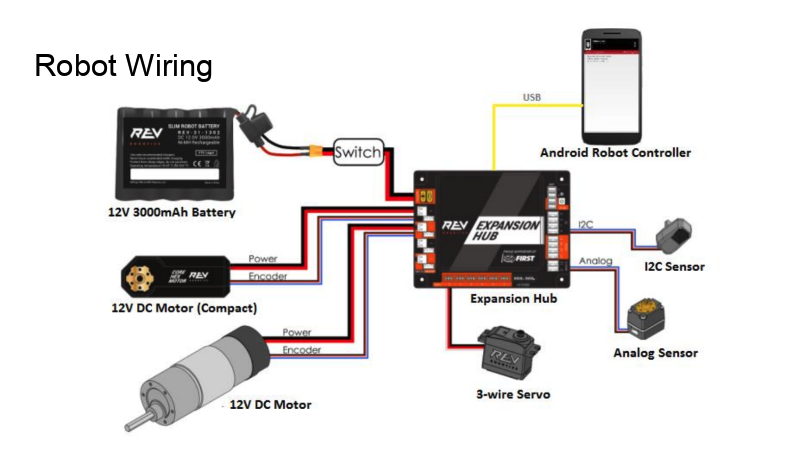

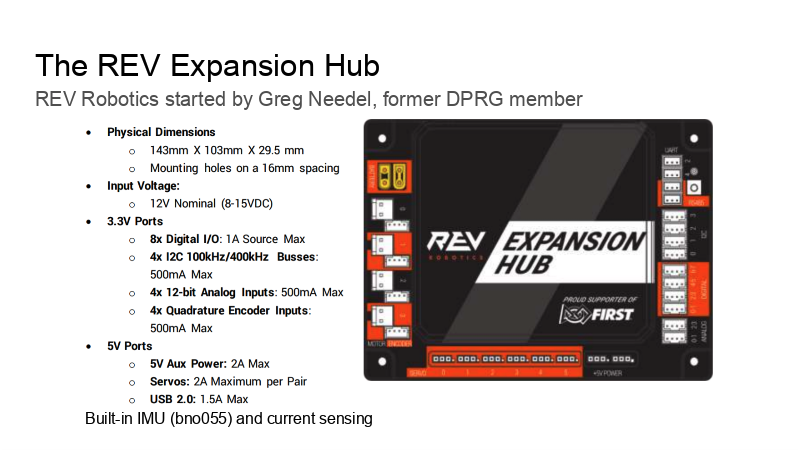

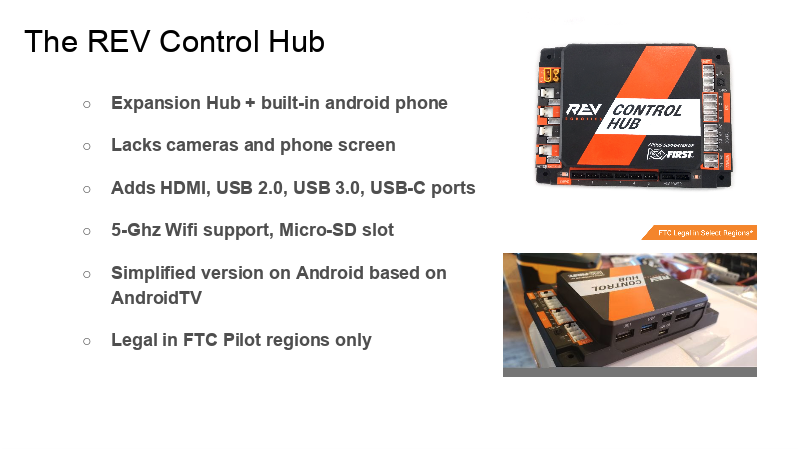

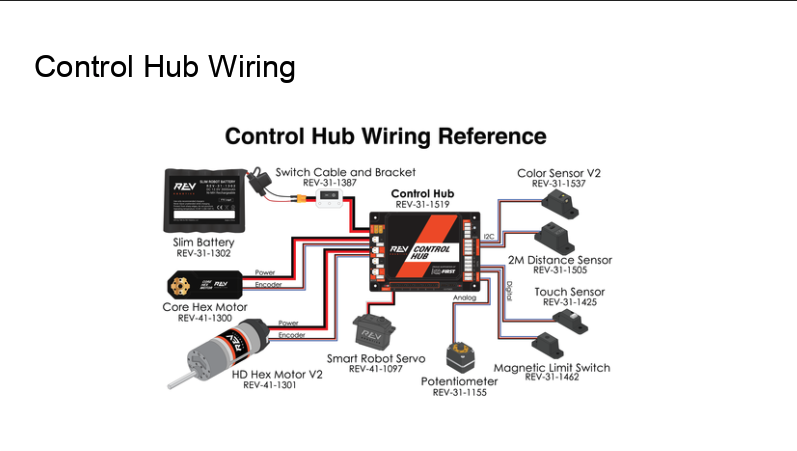

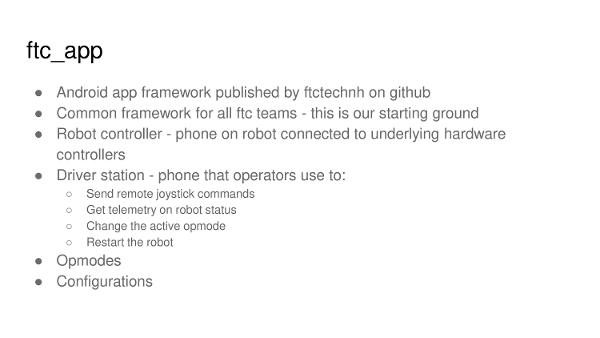

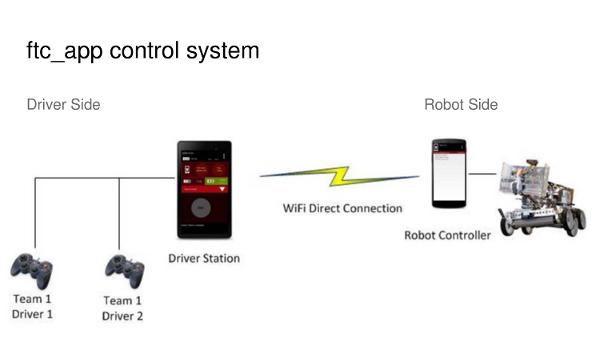

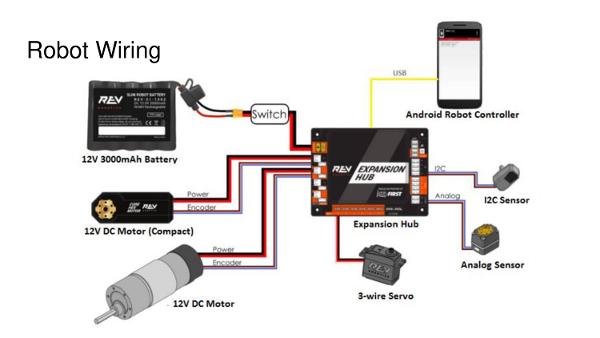

Iron Reign was recently selected to attend a REV Control Hub trial along with select other teams in the region. We wanted to do this so that we could get a good look at the control system that FTC would likely be switching to in the near future, as well as get another chance to test our robot in tournament conditions before Worlds.

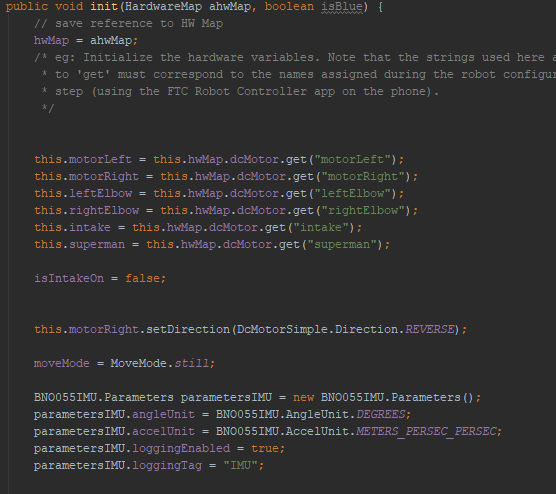

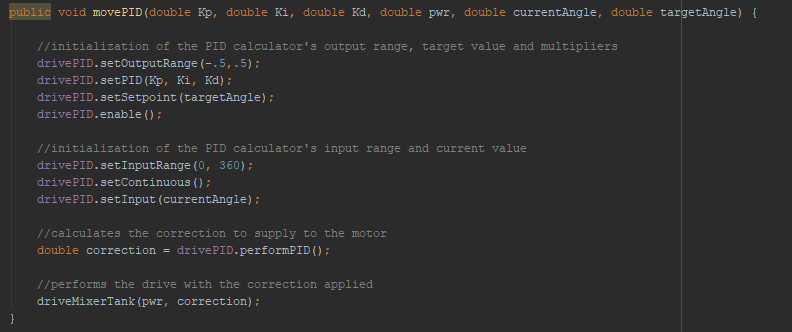

While testing it, we didn't have time to copy over our entire codebase, so we made a quick OpMode that moved one wheel of one of our old robots. Because the provided SDK is almost identical to ftc_app, no changes were needed to the existing sample OpModes. We successfully tested our OpMode, proving that it works fine with the new system.
